Study Introduces Innovative Method to Decode Complex Neural Data

A new machine learning framework helps disentangle information patterns in the brain


Image: Adobe stock

A key goal of neuroscience is understanding how the brain processes disparate streams of information, both from the external world and from within the body. When neuroscientists measure activity within the brain, these incoming streams of information are often “entangled,” making it difficult to identify which specific inputs a neuron or group of neurons is responding to. “Disentangling” the information in these neural measurements is a central challenge for neuroscientists trying to understand how the brain makes sense of the world.

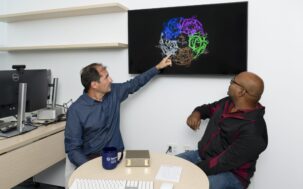

Artificial intelligence, and machine learning in particular, is allowing scientists to better analyze recordings of brain activity and disentangle neural patterns. A new study, recently published in the journal Neuron and led by Demba Ba, Kempner Institute associate faculty member and Gordon McKay Professor of Electrical Engineering at SEAS, introduces a new machine learning framework for analyzing and disentangling complex neural data.

This new computational framework, called Deconvolutional Unrolled Neural Learning (DUNL), decomposes a time series of neural signals into simple elements, helping scientists better understand how a neuron, or a population of neurons, responds to external events.

Breaking complex neural signals into “kernels”

DUNL is a neural network model that analyzes time series measurements recorded by experimental neuroscientists and decomposes them into units called “kernels.” These kernels are essentially fundamental components of the neural data, building blocks that represent the major pieces of information reaching the neurons (or neuron populations) in each dataset.

The DUNL framework can be thought of like a sound engineer pulling apart elements of an audio recording of a music concert. The recording might include the sound of the music intermixed with sounds of people talking, clinking glasses, and other background noise. The sound engineer can listen to the recording and pick out the different sources of sound, knowing that the sound of the music is the fundamental component, and the other noises are background components. The DUNL framework emulates this process, allowing neuroscientists to pick out the most important kernels of information reflected in a neural measurement dataset.
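The idea of building a recording out of kernels can be made concrete with a small sketch. The example below is not the DUNL code; it only illustrates, under a simple convolutional model, how a recorded signal could arise as a sum of kernels placed at sparse event times. The kernel shapes, the names `cue_kernel` and `reward_kernel`, and the event times are hypothetical values chosen for illustration.

```python
import numpy as np

def synthesize(kernels, event_times, amplitudes, n_samples):
    """Build a signal as a sum of kernels placed at sparse event times."""
    signal = np.zeros(n_samples)
    for kernel, times, amps in zip(kernels, event_times, amplitudes):
        code = np.zeros(n_samples)   # sparse code: nonzero only where events occur
        code[times] = amps
        # Convolving the sparse code with the kernel stamps a scaled copy
        # of the kernel at every event; truncate to the recording length.
        signal += np.convolve(code, kernel)[:n_samples]
    return signal

# Two hypothetical response kernels, each 20 samples long.
t = np.arange(20)
cue_kernel = np.exp(-t / 3.0)                 # fast decaying response
reward_kernel = (t / 4.0) * np.exp(-t / 4.0)  # slower, delayed response

signal = synthesize(
    kernels=[cue_kernel, reward_kernel],
    event_times=[np.array([10, 60]), np.array([15, 120])],
    amplitudes=[np.array([1.0, 0.5]), np.array([2.0, 1.0])],
    n_samples=200,
)
```

In this toy signal, the cue event at sample 10 and the reward event at sample 15 overlap in time, so their responses are entangled in the recording. Recovering the kernels and the event codes from `signal` alone is the kind of inverse problem a decomposition framework has to solve.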

“Discovering these components in neural data will contribute to further discovery because there are many neurons that respond to multiple events, such as odors, sounds, and social encounters, a phenomenon we call multiplexing of neural activity,” explains Sara Matias, one of the co-first authors of the paper, and a research associate in the lab of co-author Naoshige Uchida, a Kempner affiliate faculty member and professor in the Department of Molecular and Cellular Biology. “When animals navigate in complex environments, it is very hard to disentangle what are the fundamental contributions of each one of these components, especially if these events co-occur, for instance a particular smell with a social encounter. DUNL helps us parse out each one of these contributions in the neural responses, thus informing us what stimuli drive each neuron.”

Enhancing interpretability with algorithm unrolling

What distinguishes DUNL from other, more traditional, machine learning frameworks for analyzing complex neural data?

One standout feature is the researchers’ pioneering use of a technique called “unrolling” to design the layers of the neural network architecture and optimize its performance, so that the network’s estimate improves with each successive layer.

“This unrolling process is what makes DUNL interpretable and understandable,” says Bahareh Tolooshams, a first author on the paper who is currently based at the California Institute of Technology.
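In general, algorithm unrolling turns each iteration of an iterative optimization algorithm into one layer of a network, so every layer has a clear mathematical meaning. The sketch below illustrates this with a standard textbook algorithm for sparse coding, the iterative shrinkage-thresholding algorithm (ISTA). It is an assumption-laden illustration, not the architecture from the paper: the dictionary `D`, the penalty `lam`, and the number of layers are hypothetical choices.

```python
import numpy as np

def soft_threshold(x, thresh):
    """Shrink values toward zero; entries with magnitude below thresh become 0."""
    return np.sign(x) * np.maximum(np.abs(x) - thresh, 0.0)

def unrolled_ista(y, D, lam, n_layers):
    """Run n_layers ISTA iterations; each iteration plays the role of one layer."""
    step = 1.0 / np.linalg.norm(D, 2) ** 2  # 1 / (largest singular value)^2
    x = np.zeros(D.shape[1])
    objective = []
    for _ in range(n_layers):
        grad = D.T @ (D @ x - y)                          # gradient of the data-fit term
        x = soft_threshold(x - step * grad, step * lam)   # one "layer"
        objective.append(0.5 * np.sum((y - D @ x) ** 2) + lam * np.sum(np.abs(x)))
    return x, objective

# Hypothetical toy problem: a sparse code observed through a random dictionary.
rng = np.random.default_rng(0)
D = rng.standard_normal((30, 60))
D /= np.linalg.norm(D, axis=0)      # unit-norm dictionary columns
x_true = np.zeros(60)
x_true[[5, 22, 41]] = [1.5, -2.0, 1.0]
y = D @ x_true

x_hat, objective = unrolled_ista(y, D, lam=0.05, n_layers=50)
```

With this step size, the sparse-coding objective cannot increase from one layer to the next, which is one sense in which a deeper unrolled network produces a better estimate. In unrolled networks more broadly, the dictionary itself can then be learned by training through the layers; how DUNL does this is described in the paper.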

Interpretability has long been a problem with artificial neural networks, which are often described as black boxes. While they can discover latent patterns in data and use them for prediction, it is a challenge for humans to extract and understand these patterns. DUNL breaks up neural activity into kernels that are much more interpretable than the results of typical deep learning methods.

“DUNL presents a new approach in neuroscience by embedding data constraints and structures directly into the deep neural network architecture for increased human-interpretability,” says Tolooshams. “Unlike black-box AI models, DUNL functions as a white-box framework, making it easier for experimentalists to study the underlying properties of the data itself, rather than trying to interpret the network’s inner functionality.”

Overcoming limitations of dataset size in neuroscience

In addition to enhanced interpretability, DUNL reduces the amount of data needed. Artificial neural networks typically require very large datasets to perform well, which can be a limiting factor for neuroscientists. Importantly, DUNL can perform well without large datasets.

On both of these important fronts, interpretability and dataset size, DUNL offers a notable advancement over existing techniques. The model is a promising tool for neuroscientists eager to leverage the power of machine learning to better understand neural activity.

“As a pioneering development for the algorithm unrolling and interpretable deep learning frameworks, this model will be very helpful as a tool for the experimental neuroscience community,” says Matias.

To illustrate this potential, the paper demonstrates the use of DUNL in a wide variety of experimental contexts. The code for DUNL is available online, so experimentalists can try it out on their own data.

The DUNL framework was developed by Ba and fellow members of the Computation, Representation, and Inference in Signal Processing (CRISP) Group at SEAS, alongside a multidisciplinary team that included members of the labs of Naoshige Uchida and Venkatesh Murthy, a Kempner affiliate faculty member who is the Raymond Leo Erikson Life Sciences Professor of Molecular and Cellular Biology and the Paul J. Finnegan Family Director of the Center for Brain Science. Uchida and Murthy are co-authors on the Neuron paper. The team also included collaborators from the California Institute of Technology and Brown University, as well as Paul Masset, a newly appointed assistant professor at McGill University and former postdoctoral fellow at the Center for Brain Science, who led the efforts to start this multi-lab collaboration.

About the Kempner Institute

The Kempner Institute seeks to understand the basis of intelligence in natural and artificial systems by recruiting and training future generations of researchers to study intelligence from biological, cognitive, engineering, and computational perspectives. Its bold premise is that the fields of natural and artificial intelligence are intimately interconnected: the next generation of artificial intelligence (AI) will require the same principles that our brains use for fast, flexible natural reasoning, and how our brains compute and reason can be elucidated by theories developed for AI. Join the Kempner mailing list to learn more, and to receive updates and news.

PRESS CONTACT:

Deborah Apsel Lang | (617) 495-7993