Science of AI

What We Do

AI is advancing at an unprecedented pace, leaving significant gaps in our understanding of the fundamental principles behind its success. The growing size and training complexity of AI models make it increasingly difficult for scientists to study the principles at work inside them. We are developing and testing new scientific, engineering, and mathematical principles that underpin the many technological breakthroughs in AI and deep learning.

Representation Learning

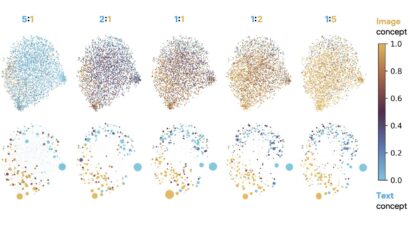

Probing the internal representations of artificial neural networks is a pivotal challenge in machine learning. In recent work at the Kempner, researchers used sparse autoencoders to show that vision-language embeddings are built from a compact, stable dictionary of single-modality concepts that link images and text through cross-modal bridges. This work provides new insight into how these models encode shared semantic structure across modalities.

UMAP visualization of the SAE concept spaces under different image–text data mixtures. Color indicates the modality score of each concept.

Source: Papadimitriou, I. et al. "Interpreting the Linear Structure of Vision-Language Model Embedding Spaces." arXiv:2504.11695 (2025).
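To make the sparse-autoencoder idea concrete, here is a minimal sketch of a standard ReLU sparse autoencoder with an L1 sparsity penalty applied to embedding vectors. It is an illustration under stated assumptions, not the method from the paper: the paper's SAE variant, dictionary size, and training details may differ. The `modality_score` function is a hypothetical definition (the share of a concept's activation mass that comes from image embeddings), used only to show how a per-concept score like the one in the figure could be computed; all names and dimensions are illustrative.

```python
import numpy as np

def sae_forward(x, W_enc, b_enc, W_dec, b_dec):
    """Encode embeddings into nonnegative concept codes and reconstruct them.

    x: (batch, d) embeddings; W_enc: (d, m); W_dec: (m, d).
    m > d is the dictionary size (number of candidate concepts).
    """
    z = np.maximum(0.0, (x - b_dec) @ W_enc + b_enc)  # ReLU keeps codes >= 0
    x_hat = z @ W_dec + b_dec                         # weighted sum of dictionary atoms
    return z, x_hat

def sae_loss(x, z, x_hat, l1_coeff=1e-3):
    # Reconstruction error plus an L1 penalty that pushes most codes to zero.
    recon = np.mean(np.sum((x - x_hat) ** 2, axis=1))
    sparsity = np.mean(np.sum(np.abs(z), axis=1))
    return recon + l1_coeff * sparsity

def modality_score(z_img, z_txt, eps=1e-12):
    # Hypothetical score per concept: 1.0 = image-only, 0.0 = text-only.
    img_mass = z_img.sum(axis=0)
    txt_mass = z_txt.sum(axis=0)
    return img_mass / (img_mass + txt_mass + eps)

rng = np.random.default_rng(0)
d, m, batch = 8, 32, 4
x = rng.normal(size=(batch, d))            # stand-in for model embeddings
W_enc = rng.normal(scale=0.1, size=(d, m))
W_dec = W_enc.T.copy()                      # tied initialization, for simplicity
b_enc, b_dec = np.zeros(m), np.zeros(d)

z, x_hat = sae_forward(x, W_enc, b_enc, W_dec, b_dec)
# Treat the first two rows as image embeddings and the last two as text.
score = modality_score(z[:2], z[2:])
print(z.shape, x_hat.shape, sae_loss(x, z, x_hat))
```

Training would minimize `sae_loss` over many embeddings; each column of `W_enc` and row of `W_dec` then corresponds to one candidate concept.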

Research Projects

Infrastructure for Studying LLMs and Generative AI

Accelerating RL for LLM Reasoning with Optimal Advantage Regression

Researchers at the Kempner propose a new RL algorithm that estimates the optimal value function offline from the reference policy and performs on-policy updates using only one generation per prompt.
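The two stages described above can be sketched under one assumption: the standard KL-regularized RL objective with strength `beta`, for which the optimal value is V*(x) = beta * log E_{y~pi_ref}[exp(r(x, y) / beta)] and the optimal advantage r(x, y) - V*(x) equals beta * log(pi*(y|x) / pi_ref(y|x)). Stage one estimates V* offline from reference-policy samples; stage two regresses the policy's implied advantage onto the estimated one using a single on-policy response. The function names and signatures are hypothetical, and this is a scalar illustration rather than the authors' implementation; a real trainer would compute sequence log-probabilities and gradients with an autodiff framework.

```python
import math

def estimate_v_star(rewards, beta):
    """Monte Carlo estimate of V*(x) = beta * log E_{y~pi_ref}[exp(r(x,y)/beta)].

    `rewards` holds rewards for N responses sampled offline from the
    reference policy for one prompt. Log-sum-exp keeps it numerically stable.
    """
    scaled = [r / beta for r in rewards]
    m = max(scaled)
    lse = m + math.log(sum(math.exp(s - m) for s in scaled))
    return beta * (lse - math.log(len(rewards)))

def advantage_regression_loss(logp_policy, logp_ref, reward, v_star, beta):
    """Squared error between the policy's implied advantage and the target.

    logp_policy / logp_ref: log-probabilities of ONE on-policy response
    under the current policy and under the reference policy.
    """
    implied = beta * (logp_policy - logp_ref)  # beta * log(pi / pi_ref)
    target = reward - v_star                    # estimated optimal advantage
    return (implied - target) ** 2

# Offline stage: binary correctness rewards from 4 reference samples.
v = estimate_v_star([0.0, 1.0, 1.0, 0.0], beta=1.0)
# Online stage: one generation per prompt, scored and regressed.
loss = advantage_regression_loss(logp_policy=-12.0, logp_ref=-12.5,
                                 reward=1.0, v_star=v, beta=1.0)
print(v, loss)
```

Because V* is computed once per prompt before training, the online loop needs only one sampled response per prompt, which is where the speedup described above comes from.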

In-Context Learning

Solvable Model of In-Context Learning Using Linear Attention

Kempner Institute members provide a sharp characterization of in-context learning (ICL) in an analytically solvable model.
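For intuition, the sketch below uses a reduction commonly applied to a single linear-attention layer trained on in-context linear regression: the prediction for a query x_q is x_q^T Gamma (1/n) sum_i y_i x_i, where Gamma is a learned d-by-d matrix shared across tasks. This parameterization is an assumption of the sketch, not a quotation of the paper's model, and the function names and task vector are hypothetical. It checks two known facts about this readout: with Gamma = lr * I it matches one gradient-descent step from w = 0, and with many isotropic Gaussian inputs and Gamma = I it approaches the true linear prediction.

```python
import numpy as np

def linear_attention_icl(X, y, x_query, Gamma):
    """Predict y for x_query from n in-context (x_i, y_i) pairs.

    Assumed reduced readout: y_hat = x_query^T Gamma (1/n) sum_i y_i x_i.
    """
    n = X.shape[0]
    h = X.T @ y / n  # context summary (1/n) sum_i y_i x_i
    return x_query @ Gamma @ h

def one_step_gd_prediction(X, y, x_query, lr):
    # One gradient step on L(w) = (1/2n) sum_i (y_i - w^T x_i)^2 from w = 0.
    n = X.shape[0]
    grad_at_zero = -(X.T @ y) / n
    w1 = -lr * grad_at_zero
    return x_query @ w1

rng = np.random.default_rng(0)
d, n = 4, 20000
w_true = np.array([1.0, -2.0, 0.5, 0.0])  # hypothetical task vector
X = rng.normal(size=(n, d))                 # isotropic Gaussian inputs
y = X @ w_true                              # noiseless linear targets
x_q = np.ones(d)

pred_attn = linear_attention_icl(X, y, x_q, Gamma=0.5 * np.eye(d))
pred_gd = one_step_gd_prediction(X, y, x_q, lr=0.5)
pred_big = linear_attention_icl(X, y, x_q, Gamma=np.eye(d))
print(pred_attn, pred_gd, pred_big, x_q @ w_true)
```

Because the whole model collapses to a single matrix acting on a context summary, its behavior can be analyzed in closed form, which is what makes models of this kind analytically tractable.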

Mitigation of Training Instabilities

Characterization and Mitigation of Training Instabilities in Microscaling Formats

Kempner researchers uncover consistent training instabilities when using new, highly efficient low-precision formats, with implications for the development of next-generation AI.
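To show where precision is lost in microscaling (MX) formats, here is a sketch of block quantization based on our reading of the OCP MX v1.0 specification: a block of values (32 in the spec) shares one power-of-two scale, and each element is stored in a narrow format, here FP4 E2M1. The shared exponent is floor(log2(max|v|)) minus the element format's largest exponent (2 for E2M1), and elements that overflow saturate at plus or minus 6. Function names are hypothetical, and tie-breaking is simplified relative to real hardware. This illustrates the format only, not the paper's analysis of the instabilities.

```python
import math

# Non-negative magnitudes representable in FP4 E2M1 (sign handled separately).
FP4_E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_E2M1_EMAX = 2  # exponent of the largest value: 6.0 = 1.5 * 2**2

def round_to_fp4(v):
    """Round to the nearest E2M1 value, saturating at +/-6.0.

    Ties go to the smaller magnitude here for simplicity; hardware
    typically uses round-to-nearest-even.
    """
    mag = min(abs(v), FP4_E2M1_VALUES[-1])
    best = min(FP4_E2M1_VALUES, key=lambda q: abs(q - mag))
    return math.copysign(best, v)

def mx_quantize_block(block):
    """Quantize one block with a shared power-of-two scale 2**shared_exp."""
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 0, [0.0] * len(block)
    shared_exp = math.floor(math.log2(amax)) - FP4_E2M1_EMAX
    scale = 2.0 ** shared_exp
    return shared_exp, [round_to_fp4(v / scale) for v in block]

def mx_dequantize_block(shared_exp, codes):
    return [c * 2.0 ** shared_exp for c in codes]

# A short block for readability (the spec uses 32 elements per block).
block = [0.1, -0.7, 3.2, 12.0]
shared_exp, codes = mx_quantize_block(block)
restored = mx_dequantize_block(shared_exp, codes)
print(shared_exp, codes, restored)
```

Because one large value sets the scale for the whole block, small values such as 0.1 here are flushed to zero. Rounding and saturation errors of this kind accumulate in weights, activations, and gradients during training.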