AI and the Brain

What We Do

A core precept of the Kempner Institute is that AI and ML will advance our understanding of the human brain, and that insights from the study of the brain will in turn generate new strategies to better understand and potentially advance AI architectures and capabilities. AI models of brain computation, learning, and memory, built on experimental data from humans and animals, will yield testable hypotheses. Our researchers will use these virtual models to gain new insights into cognition and computation that can advance our fundamental understanding of the brain and how it is perturbed in disease.

Building biologically-grounded models of neural computation

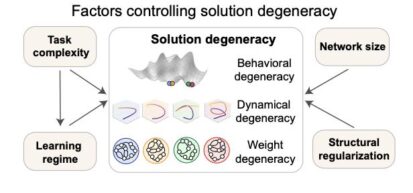

A team of Kempner researchers, including graduate fellow Ann Huang and faculty member Dr. Kanaka Rajan, introduces a versatile new framework for measuring and controlling solution degeneracy in task-trained recurrent neural networks (RNNs). By analyzing over 3,400 RNNs across four neuroscience-relevant tasks, they demonstrate that task complexity, feature learning, network size, and regularization all shape how networks converge on different internal solutions (i.e., solution degeneracy), even when their performance looks identical. The Rajan team’s new framework offers a principled, interpretable way to tailor RNN architecture for building biologically-grounded models of neural computation.

Key factors shape degeneracy across behavior, dynamics, and weights.

Source: Huang et al. "Measuring and Controlling Solution Degeneracy across Task-Trained Recurrent Neural Networks." arXiv preprint 2410.03972v2 (2024).

Research Projects

Reinforcement Learning

ANN-like Synapses in the Brain Mediate Online Reinforcement Learning

Researchers at the Kempner Institute have found a synapse in the brain that switches between being more excitatory and more inhibitory in an experience-dependent manner.

Exploring Core Mechanisms of Human Visual Perception

A Feedforward Mechanism for Human-like Contour Integration

A team at the Kempner shows that feedforward convolutional neural networks (CNNs) can perform human-like contour integration.

Interpretive Models of Neural Activity

Mechanistic Interpretability: A Challenge Common to Both Artificial and Biological Intelligence

Researchers at the Kempner have developed a family of interpretable models to explain neural activity in structured settings.