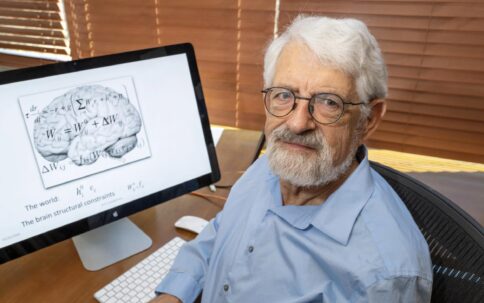

Haim Sompolinsky

Kempner Associate Faculty

Professor of Molecular and Cellular Biology and of Physics (in Residence)

Subjects I Teach:

- Computational Neuroscience (Neuro 231) and Statistical Mechanics of Spin Glasses and Neural Networks (Physics 265)

About

Haim Sompolinsky, a theoretical neuroscientist, began his career in theoretical physics, earning his PhD from Bar-Ilan University in 1980. He undertook postdoctoral research at Harvard University’s Physics Department before joining Bar-Ilan University as an Associate Professor in 1982. By 1986, he was a Professor of Physics at the Hebrew University. Sompolinsky’s tenure at Bell Laboratories lasted until 2000, and since 2006, he has been a Visiting Professor at Harvard’s Center for Brain Science.

Sompolinsky’s early work on spin glasses laid the foundation for his mid-1980s shift to neural network theory, pioneering the new interdisciplinary field of theoretical neuroscience. He helped found the Interdisciplinary Center for Neural Computation (ICNC) at Hebrew University in 1992 and later the Edmond and Lily Safra Center for Brain Sciences. Since 2022, he has held a professorship in Molecular & Cellular Biology and Physics (in Residence) at Harvard University and directs the Swartz Program for Theoretical Neuroscience there. Among his honors are the 2011 Swartz Prize for Theoretical and Computational Neuroscience, the 2016 EMET Prize for Life Science-Brain Research, and the 2022 Gruber Neuroscience Prize.

Research Focus

Sompolinsky’s current research focuses on theoretical neuroscience, deep learning, and natural and artificial intelligence. His research explores the theoretical foundations of cognitive functions in both biological brains and artificial intelligence systems. He applies principles of statistical mechanics, nonlinear dynamics, disordered systems, and machine learning to investigate the interplay between structure, dynamics, and computation in neural circuits. His work addresses topics such as working and long-term memory, chaos and variability in neuronal circuits, excitation-inhibition balance, neural attractor manifolds, learning in spiking networks, and the principles of neural population codes. His recent collaborations include work with Florian Engert and Jeff Lichtman on neural circuits of zebrafish larvae, and with Adi Mizrahi on perceptual learning in the auditory system.

Currently, Sompolinsky focuses on deep neural networks and deep learning. This encompasses the thermodynamic theory of generalization and feature learning in wide deep networks, as well as concept representation and few-shot learning in artificial systems and brain hierarchies, particularly in vision and language. Additionally, he examines mechanisms enabling continual and lifelong learning and memory, and the application of generative AI in modeling neural processes, learning, and memory of relational and structured knowledge.