The Power of Scale in Machine Learning

The idea of scaling in machine learning is that the quality of a model improves with the quantity of resources invested in it. When it comes to AI technology, bigger is usually better, at least for the current generation of ML models.

Image by Yohn J. John using Adobe Illustrator and code created by Claude.

AI is currently advancing at an unprecedented clip, but many of the mathematical methods that are enabling these advances were developed decades ago. The big breakthroughs in machine learning (ML) and AI during the 2010s and 2020s were as much a result of scaling up the old methods as developing new ones. When it comes to AI technology, bigger is usually better, at least for the current generation of ML models.

This Kempner Byte looks at how scale became crucial to AI and ML. We’ll learn about the discovery of mathematical laws of scaling and look at how they inform the development and study of ML models, especially artificial neural networks (ANNs), which are software programs that are loosely inspired by the structure of brains.

The idea of scaling in ML is that the quality of a model improves with the quantity of resources invested in it. The quality of an ANN is assessed in terms of how low its error rate or “loss” is. And, the three main resources that show the scale of the investment and determine its relative quality are: 1) the size of the model in terms of the number of adjustable parameters [see How is the size of a model assessed?”]; 2) the size of the dataset used to train the network; and 3) the amount of computational time the model spends on training with a particular dataset. [1]

Increasing, or scaling-up, any of these elements not only improves the accuracy of an ANN, it can also lead to qualitative leaps in ability. This was illustrated in dramatic fashion by GPT, the pathbreaking large language model developed by OpenAI, and arguably the world’s most famous ANN. GPT-3 was over 100 times bigger than GPT-2 but was otherwise based on the same basic type of architecture and training procedure. Powerful and unexpected abilities emerged with the increased scale of GPT-3, including the ability to solve cognitive problems and generate working computer code.

The rise of scaling laws in neural network research

The first big ANN breakthrough, introduced in 2012, was AlexNet, an image classification model that could be shown a new image and name it using a set of predefined labels. In terms of its basic architecture and learning algorithm, AlexNet was similar to its predecessors from the 1980s and 1990. Scale was crucial to the success of AlexNet: making the model smaller by reducing the number of artificial neurons degraded performance significantly. The other key factor was the size of the dataset, which was much larger than datasets used in prior decades.

AlexNet signaled to ML researchers that ANNs, which had been around for many years, were now ready for prime time. At the same time, two key developments were unfolding. First, computing resources began to enable much larger and more flexible models. Second, the available datasets for training ANNs were reaching ‘critical mass’ for a variety of tasks. This was thanks in part to digital cameras and the ever-expanding storage capacity of computers, vast numbers of images had accumulated by the early 2000s. And, thanks to the internet, the labor-intensive process of labeling images to specify what they contain could be done at scale by people all around the world.

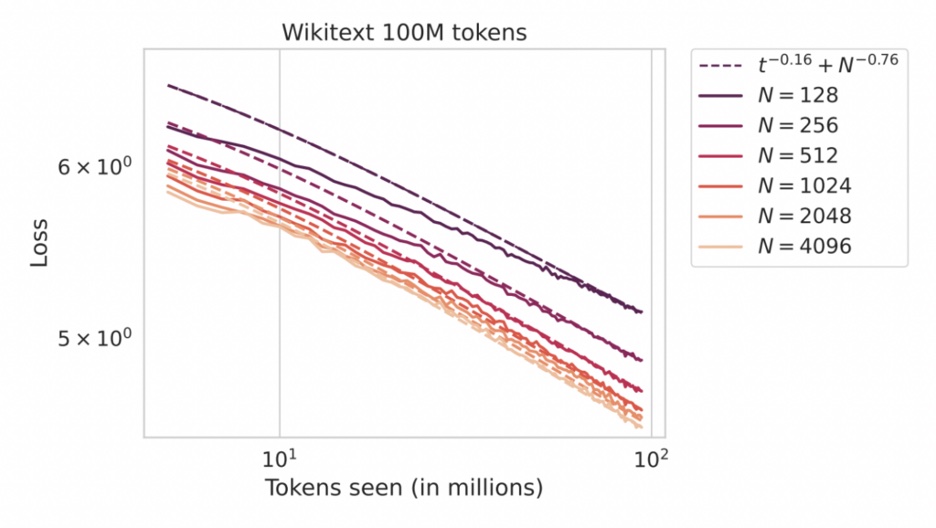

The benefits of scaling up ANNs and their training data led naturally to an important theoretical question. Was there a way to predict the effects of scaling up a model or a dataset? Researchers sought to uncover an underlying mathematical pattern behind the scaling phenomenon. When researchers began doing experiments on scaling they discerned a trend: for many types of tasks, the error rate of an ANN decreases in a predictable way with the increase of the size of the model, the dataset size, or the amount of computation performed during training. The shape of this predictable trend when graphed is captured mathematically by what is known as a neural scaling law. Once the mathematical form has been identified, researchers can forecast the performance of a scaled-up model before it has been trained, by extrapolating the trend to future results with the help of the scaling law.

Neural scaling laws have practical applications in ML beyond just predicting how scaling might improve a given ANN. Scaling laws can help researchers design their models and decide how long to train them, given some constraints like the dataset size or computational resources. So if a researcher wants a model to reach a certain level of accuracy within a certain timeframe, a scaling law can offer an estimate of how big the model should be. And if a researcher is working with a model of a fixed size, a scaling law can offer a sense of how big of a dataset would be required to reach a desired level of accuracy.

These laws can be used for a variety of practical purposes. For example, if you have limited data you might want to scale up the model size to get a certain level of accuracy. Alternatively, if you have a fixed amount of money to spend, you can figure out the optimal model size given your budget for data and GPU computation. The development of the popular open-source LLM Llama-3 involved this sort of use of scaling laws.

Scale and the “bitter lesson”

The power of scaling played a major role in the deep learning revolution kickstarted by AlexNet. Scaling gave researchers the confidence to try larger versions of the models and training algorithms that had already been developed and worked to some degree in the past, rather than designing new model architectures.

Researchers began to note that scaled-up versions of simple models did much better than more complex models derived from theories of human intelligence. In 2019, this observation was dubbed the “bitter lesson” by computer scientist Richard Sutton, one of the pioneers of the ML subfield of reinforcement learning. He advised AI researchers to use general-purpose methods that “continue to scale with increased computation even as the available computation becomes very great.” [2]

The reliability of scalable, general-purpose ML methods also encouraged the development of a vast eco-system of hardware and software resources optimized for large-scale ML. [3] As more researchers at universities and businesses began to use the same basic ML framework, a thriving ML community emerged, creating the necessary conditions for standardized and well-maintained toolkits such as PyTorch, the open-source software package that most researchers use to code up their ANNs.

The future of scaling research

The bitter lesson notwithstanding, there may be limits to the simple strategy of scaling up models, datasets, and computation. In fact, scaling laws themselves predict this. The mathematical relationship between loss and model size, for example, shows a tapering off: above a certain scale, there are diminishing returns from further increases in model size.

Some researchers are already sounding the alarm. Ilya Sutskever, one of the pioneers in the study of neural scaling laws and a former OpenAI researcher instrumental in the development of ChatGPT, expects that researchers will soon start looking for the next big thing in ML. “The 2010s were the age of scaling, now we’re back in the age of wonder and discovery once again,” Sutskever told the Reuters news agency in a recent interview [4].

Researchers now debate whether we have already reached the point of diminishing returns on scale for different types of ML models. One interesting complication is that in certain domains researchers are running out of fresh data to train their models. Some researchers estimate that LLMs may soon have been trained on all available human text ever written! [5]

But even if some models are hitting a performance plateau (or running out of data), it is unlikely that the concept of scaling will cease to be important for the field of ML. New types of scaling patterns are still being discovered. For example, the accuracy of a recently-developed LLM procedure called “test-time compute,” in which task-specific computations are performed after the initial training stages, appears to follow a scaling law. This has practical implications for improving the functionality of already-trained models.

Researchers are also incorporating new factors into scaling theory. Kempner associate faculty member Cengiz Pehlevan, for example, has been involved in several projects that expand the scope of scaling research. One recent study by Pehlevan and collaborators showed that the precision with which model parameters are encoded has an impact on scaling laws. [For more on what ‘parameters’ are, see sidebar How is the size of a model assessed?]

Understanding the relationship among different scaling laws is also a rich domain of investigation. A recent study by Kempner researchers showed how a scaling law derived for one model can be used to infer the scaling law for a different model. [See the blog post Loss-to-Loss Prediction: Scaling Laws for all Datasets for more information on this work.]

So, in the end, scaling research is about much more than “bigger is better.” It’s about leveraging existing models in exciting new ways, harnessing resources to make huge strides in advancing capability and performance of models, and opening entirely new frontiers in the theory and application of ML.

Scaling research at the Kempner Institute

Interested in scaling laws? Here’s a selection of Deeper Learning blog posts on the topic by Kempner researchers, along with the preprints discussed in the posts.

- Loss-to-Loss Prediction: Scaling Laws for all Datasets. Brandfonbrener, D., Anand, N., Vyas, N., Malach, E., & Kakade, S. (2024). Loss-to-Loss Prediction: Scaling Laws for All Datasets. arXiv preprint arXiv:2411.12925. https://doi.org/10.48550/arXiv.2411.12925

- How Does Critical Batch Size Scale in Pre-training? (Decoupling Data and Model Size). Zhang, H., Morwani, D., Vyas, N., Wu, J., Zou, D., Ghai, U., Foser, D. & Kakade, S. (2024). How Does Critical Batch Size Scale in Pre-training?. arXiv preprint arXiv:2410.21676. https://doi.org/10.48550/arXiv.2410.21676

- Infinite Limits of Neural Networks. Vyas, N., Atanasov, A., Bordelon, B., Morwani, D., Sainathan, S., & Pehlevan, C. (2024). Feature-learning networks are consistent across widths at realistic scales. Advances in Neural Information Processing Systems, 36. https://doi.org/10.48550/arXiv.2305.18411

- Bordelon, B., Noci, L., Li, M. B., Hanin, B., & Pehlevan, C. (2023). Depthwise hyperparameter transfer in residual networks: Dynamics and scaling limit. arXiv preprint arXiv:2309.16620. https://doi.org/10.48550/arXiv.2410.21676

- A Dynamical Model of Neural Scaling Laws. Bordelon, B., Atanasov, A., & Pehlevan, C. (2024). A dynamical model of neural scaling laws. arXiv preprint arXiv:2402.01092. https://doi.org/10.48550/arXiv.2309.16620

About this series

Kempner Bytes is a series of explainers from the Kempner Institute, related to cutting edge natural and artificial intelligence research, as well as the conceptual and technological foundations of that work.

About the Kempner Institute

The Kempner Institute seeks to understand the basis of intelligence in natural and artificial systems by recruiting and training future generations of researchers to study intelligence from biological, cognitive, engineering, and computational perspectives. Its bold premise is that the fields of natural and artificial intelligence are intimately interconnected; the next generation of artificial intelligence (AI) will require the same principles that our brains use for fast, flexible natural reasoning, and understanding how our brains compute and reason can be elucidated by theories developed for AI. Join the Kempner mailing list to learn more, and to receive updates and news.

- It is worth pointing out that dataset size and computational time can be strongly correlated when the training data isn’t repeated, which is roughly the case with LLMs. In this case, computational time is entirely determined by the time it takes to go through the entire dataset once. But if there is repeated cycling through the same data (multiple “epochs”), these factors can differ since the amount of computation is determined by the independent choice of how many epochs to run. For scaling laws, researchers usually report patterns extracted from a single pass over dataset.[↩]

- Richard Sutton – “The Bitter Lesson”blog post:http://www.incompleteideas.net/IncIdeas/BitterLesson.html[↩]

- To learn more about resources designed for large-scale ML projects, check out the Kempner Computing Handbook.[↩]

- ‘OpenAI and others seek new path to smarter AI as current methods hit limitations’ https://www.reuters.com/technology/artificial-intelligence/openai-rivals-seek-new-path-smarter-ai-current-methods-hit-limitations-2024-11-11/[↩]

- “The AI revolution is running out of data. What can researchers do?” https://www.nature.com/articles/d41586-024-03990-2[↩]