From Models to Scientists: Building AI Agents for Scientific Discovery

October 09, 2025

ToolUniverse is a framework for developing AI agents for science, often referred to as “AI scientists.” It provides an environment where LLMs interact with more than six hundred scientific tools, including machine learning models, databases, and simulators. ToolUniverse standardizes how AI models access and combine these tools, allowing researchers to develop, test, and evaluate AI agents for science.

Scientific discovery has always advanced through new methods and instruments. A recent development in this progress is the rise of AI agents. Built on large language models (LLMs), these systems extend beyond text generation to reasoning, planning, and acting toward goals. AI agents break complex problems into steps, formulate strategies, and use external resources such as databases, APIs, or simulators to collect evidence and perform analyses. They maintain short-term memory to track progress, adjust strategies through iteration, and self-correct when outputs deviate from expectations.

Applying such agents to science opens a new frontier in computational research. Early systems show that LLMs can generate hypotheses, analyze experimental data, and coordinate specialized software to test ideas. Yet scientific inquiry demands integration across data types, models, and experimental modalities. The long-term goal is to build agents that plan and execute end-to-end workflows: designing experiments, running simulations, interpreting data, and updating hypotheses through feedback from results and human collaborators. Achieving this vision requires connecting an agent’s reasoning core to scientific databases, models, and laboratory systems so it can interact with biological, chemical, and physical processes. These advances point toward AI systems that engage in science not only as analytical engines but as computational co-scientists.

ToolUniverse ecosystem for developing AI agents for science

ToolUniverse is a framework for developing AI agents for science, often referred to as “AI scientists” (Figure 1). It provides an environment where LLMs interact with more than six hundred scientific tools, including machine learning models, databases, and simulators. Each tool is a callable instrument that an agent can use to perform scientific operations such as modeling molecular interactions, analyzing data, or synthesizing literature. ToolUniverse standardizes how AI models access and combine these tools, allowing researchers to develop, test, and evaluate AI agents for science.

These agents combine reasoning with action. To move from inference to experimentation, they must call external tools such as software packages, databases, or models that perform scientific functions beyond the model’s internal capabilities. These interactions often rely on a structured communication layer known as the Model Context Protocol (MCP). MCP defines a shared language between agents and computational tools, similar to an API standard for autonomous systems. It specifies how agents discover tool capabilities, request parameters, validate input and output formats, and execute tools as part of a reasoning loop. MCP provides a foundation for general tool use, but it does not prescribe how reasoning agents should coordinate multiple tools, interpret outputs, or handle the specialized data formats common in scientific workflows. These additional layers are required for agents to function in research contexts where results must be reproducible and interpretable.
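As a rough illustration of the discovery, validation, and execution steps described above, the sketch below builds the two JSON-RPC 2.0 messages that MCP uses for tools (`tools/list` and `tools/call`) and checks arguments against a tool's declared input schema before sending. The tool name `predict_binding_affinity`, its schema, the argument values, and all helper functions are hypothetical and chosen only for illustration; they are not taken from ToolUniverse or from any MCP SDK.

```python
import json

# Hypothetical tool description in the shape an MCP server advertises:
# a name, a description, and a JSON Schema for the inputs.
EXAMPLE_TOOL = {
    "name": "predict_binding_affinity",  # illustrative name, not a real tool
    "description": "Estimate binding affinity between a ligand and a protein.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "smiles": {"type": "string"},
            "target_id": {"type": "string"},
        },
        "required": ["smiles", "target_id"],
    },
}

# Map the JSON Schema type names used above to Python types.
_PYTHON_TYPES = {"string": str, "number": (int, float)}


def list_tools_request(request_id):
    """Message asking a server which tools it offers."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}


def argument_problems(tool, arguments):
    """Return a list of problems; an empty list means the arguments fit."""
    schema = tool["inputSchema"]
    problems = [f"missing: {key}" for key in schema.get("required", [])
                if key not in arguments]
    for key, value in arguments.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append(f"unexpected: {key}")
        elif not isinstance(value, _PYTHON_TYPES[spec["type"]]):
            problems.append(f"wrong type: {key}")
    return problems


def call_tool_request(request_id, tool, arguments):
    """Validate arguments locally, then build the tools/call message."""
    problems = argument_problems(tool, arguments)
    if problems:
        raise ValueError("; ".join(problems))
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }


request = call_tool_request(
    2, EXAMPLE_TOOL, {"smiles": "CCO", "target_id": "TARGET_1"}
)
print(json.dumps(request, indent=2))
```

The sketch stops at message construction. Transport, result handling, and coordination across several tools are exactly the layers the paragraph above notes that MCP leaves open.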

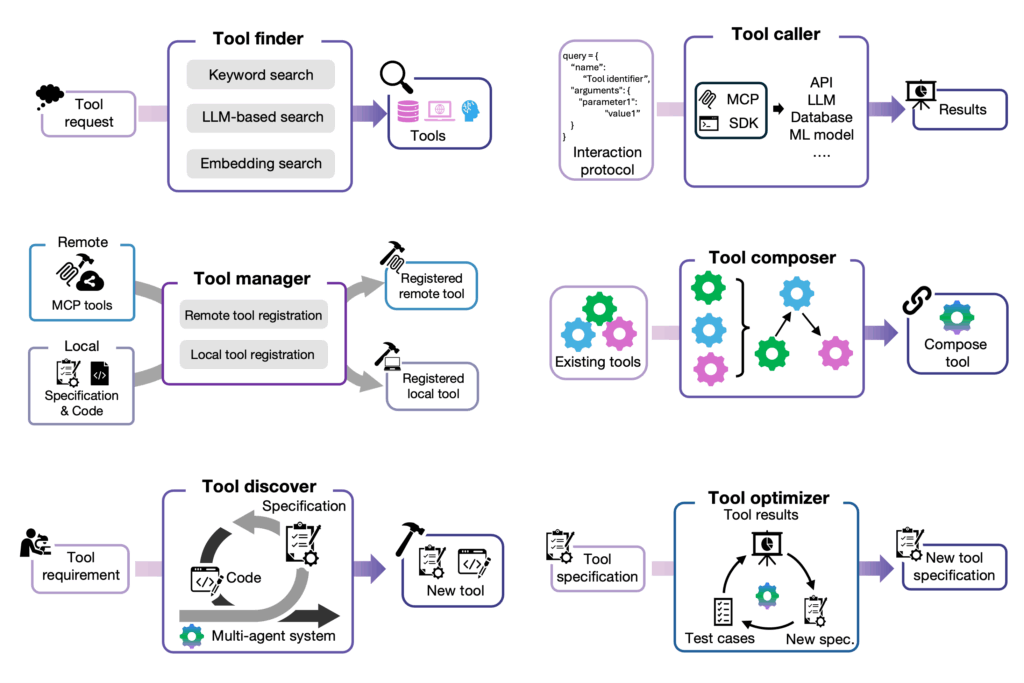

ToolUniverse introduces a scientific protocol that extends beyond the capabilities of MCP. Much like HTTP standardized how computers communicate over the internet, ToolUniverse defines how agents coordinate and execute scientific reasoning across tools (Figure 2). Its architecture centers on two components: Tool Finder and Tool Caller. Tool Finder uses keyword search, language model reasoning, and vector-embedding retrieval to identify the most relevant tool for a specific task. This design allows an agent to search across hundreds of tools while maintaining an understanding of each tool’s purpose and data requirements. Tool Caller executes a tool by validating the query, invoking the selected tool, and returning results in a structured format. In contrast to MCP, which handles communication at the system level, ToolUniverse’s protocol introduces mechanisms for reasoning control, input validation, and output standardization across heterogeneous scientific tools. These components allow agents to compose, execute, and evaluate multi-step scientific workflows, turning language models into operational AI scientists that can act within real research environments.
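To make the division of labor between the two components concrete, here is a minimal sketch under simplifying assumptions. The finder uses keyword overlap only (the language-model and embedding retrieval described above are omitted), and the caller checks required arguments, invokes the tool, and wraps the outcome in a uniform result. The `Tool` class, `find_tool`, `call_tool`, and the two catalog entries are hypothetical stand-ins, not ToolUniverse's actual interfaces.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Tool:
    name: str
    description: str
    required: tuple
    run: Callable[..., Any]


def _tokens(text):
    return set(text.lower().replace("_", " ").split())


def find_tool(query, catalog):
    """Keyword-only stand-in for a finder: pick the tool with most word overlap."""
    if not catalog:
        return None
    wanted = _tokens(query)
    scored = [(len(wanted & _tokens(t.name + " " + t.description)), t)
              for t in catalog]
    best_score, best_tool = max(scored, key=lambda pair: pair[0])
    return best_tool if best_score > 0 else None


def call_tool(tool, arguments):
    """Validate required arguments, run the tool, return a structured result."""
    missing = [key for key in tool.required if key not in arguments]
    if missing:
        return {"tool": tool.name, "ok": False,
                "error": f"missing arguments: {missing}"}
    try:
        return {"tool": tool.name, "ok": True, "result": tool.run(**arguments)}
    except Exception as exc:  # report failures in the same structured format
        return {"tool": tool.name, "ok": False, "error": str(exc)}


def gc_content(sequence):
    """Fraction of G and C bases in a DNA sequence."""
    s = sequence.upper()
    return (s.count("G") + s.count("C")) / len(s)


# Hypothetical two-entry catalog; a real catalog would hold hundreds of tools.
CATALOG = [
    Tool("literature_search",
         "search biomedical literature for papers on a topic",
         ("topic",),
         lambda topic: [f"placeholder paper about {topic}"]),
    Tool("gc_content",
         "compute the GC fraction of a DNA sequence",
         ("sequence",),
         gc_content),
]

tool = find_tool("GC content of a DNA sequence", CATALOG)
print(call_tool(tool, {"sequence": "ATGC"}))
```

Returning errors in the same shape as successes means an agent can inspect one result format regardless of which tool ran or how it failed.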

Expanding the capabilities of AI agents for scientific discovery

ToolUniverse introduces four components that make it possible to build AI agents for science: Tool Manager, Tool Composer, Tool Discover, and Tool Optimizer (Figure 3). They support the complete cycle of scientific reasoning. Each defines a mechanism that connects an LLM to the computational and experimental tools used in science. These components turn ToolUniverse into a programmable environment for AI scientists.

Tool Manager provides a registration protocol for integrating tools into ToolUniverse. Local tools are added through short specifications that define their purpose, parameters, and outputs. Remote tools connect through MCP, which provides network-based access to software packages and servers without exposing internal code or data. The manager validates tool definitions and adds them to a unified schema so that any agent can call them in a consistent format. By supporting both local and remote integration, it allows AI agents to work across open and private environments. In practice, this enables agents to connect to new databases, simulators, or analysis packages as they appear.
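The registration idea can be sketched as a small registry that validates a specification and records whether the tool runs locally or behind a remote endpoint. This is an assumption-laden illustration: the field names in `REQUIRED_FIELDS`, the `ToolRegistry` class, the example tools, and the `https://example.org/mcp` endpoint are all hypothetical and do not reflect ToolUniverse's real specification format.

```python
# Hypothetical minimum set of fields a tool specification must carry.
REQUIRED_FIELDS = ("name", "description", "parameters", "returns")


class ToolRegistry:
    """Toy registry that validates specs and stores them in one schema."""

    def __init__(self):
        self._tools = {}

    def register(self, spec, handler=None, endpoint=None):
        missing = [field for field in REQUIRED_FIELDS if field not in spec]
        if missing:
            raise ValueError(f"spec is missing fields: {missing}")
        if spec["name"] in self._tools:
            raise ValueError(f"tool already registered: {spec['name']}")
        # A local tool supplies a Python handler; a remote tool supplies
        # the address of a server. Exactly one of the two is expected.
        if (handler is None) == (endpoint is None):
            raise ValueError("provide exactly one of handler or endpoint")
        self._tools[spec["name"]] = {
            "spec": dict(spec),
            "kind": "local" if handler is not None else "remote",
            "handler": handler,
            "endpoint": endpoint,
        }

    def describe(self):
        """Uniform listing an agent could read, whatever the tool's origin."""
        return [
            {"name": name, "kind": entry["kind"],
             "parameters": entry["spec"]["parameters"]}
            for name, entry in sorted(self._tools.items())
        ]


registry = ToolRegistry()
registry.register(
    {"name": "sequence_length", "description": "Length of a sequence.",
     "parameters": {"sequence": "string"}, "returns": {"length": "integer"}},
    handler=lambda sequence: {"length": len(sequence)},
)
registry.register(
    {"name": "remote_docking", "description": "Docking run on a remote server.",
     "parameters": {"smiles": "string"}, "returns": {"score": "number"}},
    endpoint="https://example.org/mcp",  # placeholder address
)
print(registry.describe())
```

Because both kinds of tool end up in the same listing, an agent reading `describe()` does not need to know where a tool executes.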

Tool Composer allows agents to assemble workflows from multiple tools. It defines how outputs from one tool become inputs to another and how conditional execution or feedback loops are handled. This creates executable pipelines that can model tasks such as screening compounds, processing omics data, or analyzing literature. For AI agents, this capability enables a shift from single-step tool use to multi-step reasoning, where an agent can plan and execute full experiments or analyses.
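A minimal sketch of the composition idea, assuming a workflow is a list of steps that read named values from a shared context and write one value back. It covers output-to-input wiring and conditional execution; feedback loops are left out for brevity. The step format, `run_pipeline`, the three toy tools, and the compound scores are invented placeholders, not ToolUniverse constructs or real data.

```python
def run_pipeline(steps, initial):
    """Run steps in order, passing named outputs forward through a context."""
    context = dict(initial)
    for step in steps:
        condition = step.get("when")
        if condition is not None and not condition(context):
            continue  # conditional execution: skip this step
        # Map each tool parameter to a value produced earlier in the run.
        kwargs = {param: context[key] for param, key in step["inputs"].items()}
        context[step["output"]] = step["tool"](**kwargs)
    return context


# Toy tools standing in for real scientific tools.
def load_scores():
    # Placeholder values, invented for this example.
    return {"cmpd_a": 0.2, "cmpd_b": 0.9, "cmpd_c": 0.7}


def keep_above(scores, threshold):
    return sorted(name for name, score in scores.items() if score >= threshold)


def report(names):
    return f"{len(names)} compounds passed: " + ", ".join(names)


steps = [
    {"tool": load_scores, "inputs": {}, "output": "scores"},
    {"tool": keep_above,
     "inputs": {"scores": "scores", "threshold": "threshold"},
     "output": "hits"},
    {"tool": report, "inputs": {"names": "hits"}, "output": "report",
     "when": lambda ctx: len(ctx["hits"]) > 0},  # only report if there are hits
]

result = run_pipeline(steps, {"threshold": 0.5})
print(result["report"])
```

Keeping the wiring in data rather than in code is one possible design choice: an agent can then emit or edit a workflow as a plain structure before anything runs.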

Tool Discover generates new tools from natural language descriptions. It converts a text request into a structured specification, creates the corresponding code, tests the implementation, and refines it until it meets correctness criteria. This process uses agentic feedback loops that compare the tool’s intended behavior with actual results. Tool Discover allows ToolUniverse to grow continuously without manual coding. For AI agents, it provides a path from recognizing missing functionality to creating new capabilities that fill those gaps.
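The generate, test, and refine cycle described above can be sketched as a loop that asks a proposer for an implementation, runs it against test cases, and feeds the failures back into the next attempt. In this sketch the proposer is a canned stand-in for a language model, so only the control flow is illustrated. `discover_tool`, `run_tests`, `canned_proposer`, and the spec layout are hypothetical.

```python
def run_tests(func, cases):
    """Return human-readable failure messages; empty means all cases pass."""
    failures = []
    for args, expected in cases:
        try:
            actual = func(*args)
        except Exception as exc:
            failures.append(f"{args}: raised {exc!r}")
            continue
        if actual != expected:
            failures.append(f"{args}: expected {expected!r}, got {actual!r}")
    return failures


def discover_tool(spec, propose, max_rounds=3):
    """Ask for implementations until one passes the spec's test cases."""
    feedback = []
    for round_number in range(1, max_rounds + 1):
        candidate = propose(spec, feedback)
        feedback = run_tests(candidate, spec["test_cases"])
        if not feedback:
            return candidate, round_number
    raise RuntimeError(f"no passing implementation after {max_rounds} rounds")


def canned_proposer(spec, feedback):
    """Stand-in for an LLM: a flawed first draft, then a corrected one."""
    if not feedback:
        return lambda seq: seq[::-1]  # reverses but forgets to complement
    return lambda seq: seq.translate(str.maketrans("ACGT", "TGCA"))[::-1]


spec = {
    "description": "Return the reverse complement of a DNA sequence.",
    "test_cases": [(("ATGC",), "GCAT"), (("AAC",), "GTT")],
}

tool, rounds = discover_tool(spec, canned_proposer)
print(rounds, tool("ATGC"))
```

In a real system the proposer would generate code from the description and the failure messages, and generated code would need sandboxing before execution, which this sketch does not attempt.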

Tool Optimizer improves existing tools by comparing their specifications with real behavior. It creates test cases, runs the tools, analyzes discrepancies, and updates definitions and documentation. This process standardizes and simplifies tools over time. The optimizer maintains consistency across hundreds of tools so that AI agents can rely on predictable interfaces and outputs. In scientific use, this ensures that workflows remain reproducible and tools are used correctly.
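One way to picture the comparison between a specification and real behavior is a check that runs a tool on test inputs and reports where the declared outputs and the observed outputs disagree. The sketch below assumes a spec that lists return fields and their Python type names. `find_discrepancies`, `observed_returns`, and the example tool and spec are hypothetical.

```python
def find_discrepancies(spec, tool, test_inputs):
    """Compare declared return fields and types against actual outputs."""
    declared = spec["returns"]  # field name -> Python type name
    issues = set()
    for kwargs in test_inputs:
        output = tool(**kwargs)
        for field, type_name in declared.items():
            if field not in output:
                issues.add(f"declared field '{field}' was not returned")
            elif type(output[field]).__name__ != type_name:
                observed = type(output[field]).__name__
                issues.add(
                    f"field '{field}' declared {type_name}, observed {observed}"
                )
        for field in output:
            if field not in declared:
                issues.add(f"undocumented field '{field}'")
    return sorted(issues)


def observed_returns(tool, test_inputs):
    """Build a 'returns' section from what the tool actually produces."""
    returns = {}
    for kwargs in test_inputs:
        for field, value in tool(**kwargs).items():
            returns[field] = type(value).__name__
    return returns


def gc_tool(sequence):
    s = sequence.upper()
    return {"gc_fraction": (s.count("G") + s.count("C")) / len(s),
            "length": len(s)}


# Deliberately inaccurate spec: wrong type, and 'length' is undocumented.
spec = {"name": "gc_tool", "returns": {"gc_fraction": "int"}}
test_inputs = [{"sequence": "ATGC"}, {"sequence": "GGCC"}]

print(find_discrepancies(spec, gc_tool, test_inputs))

# Update the definition to match behavior, then confirm it is consistent.
revised = {**spec, "returns": observed_returns(gc_tool, test_inputs)}
print(find_discrepancies(revised, gc_tool, test_inputs))
```

This only checks output shape. Generating the test cases themselves and rewriting documentation, as the paragraph describes, would sit on top of a check like this.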

Integrating tools with language and reasoning models

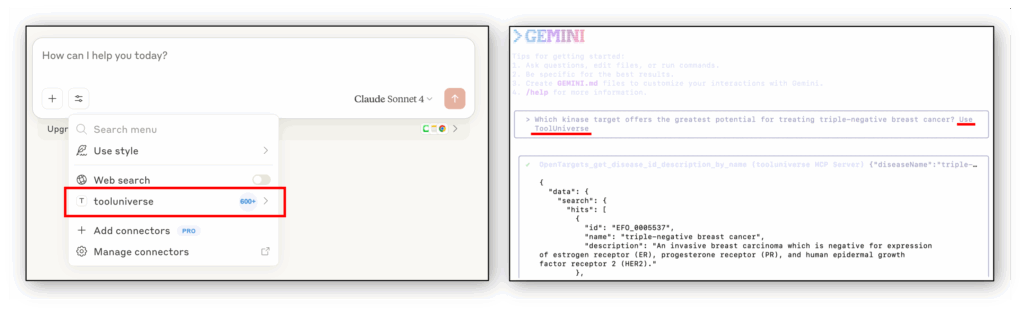

Large language models vary widely in architecture, scale, and capability. Some are small and efficient enough to run locally, while others are foundation models trained on trillions of tokens and deployed through external APIs. Beyond these, there are large reasoning models that extend standard language modeling with chains of thought, memory, or planning. Each model interprets instructions differently and varies in how it decomposes problems, handles symbolic inputs, or plans over long horizons. These differences affect how well a model can act as an AI agent. For example, small open models can run in constrained environments but have limited reasoning depth, while large closed models perform complex inference but depend on external serving infrastructure. Specialized models trained with reinforcement learning or expert demonstrations can focus on specific tasks, such as hypothesis testing or simulation control, but require a stable interface to interact with external systems.

ToolUniverse connects to any of these models through a standard set of callable operations (Figure 4). It defines each interaction as a function call that the model can use without modification to its weights or tokenizer. The connection is implemented through a lightweight wrapper that supplies tool definitions to the model’s context and interprets its outputs as structured tool calls. This design separates tool execution from model architecture, making it possible to evaluate and compare agents built from different LLMs, reasoning models, or reinforcement-trained systems under identical experimental conditions.
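A wrapper of this kind can be sketched in a few lines, assuming the model is any function from a prompt string to a reply string. The wrapper puts tool definitions into the prompt, tries to read the reply as a JSON tool call, and otherwise treats it as plain text. The JSON call format, `build_prompt`, `parse_tool_call`, `agent_step`, and the scripted model are illustrative assumptions, not ToolUniverse's actual wrapper.

```python
import json


def build_prompt(task, tool_specs):
    """Place tool definitions in the model's context alongside the task."""
    lines = [
        "You may call one tool by replying with JSON of the form:",
        '{"tool": "<name>", "arguments": {...}}',
        "Available tools:",
    ]
    for spec in tool_specs:
        params = ", ".join(spec["parameters"])
        lines.append(f"- {spec['name']}: {spec['description']} ({params})")
    lines.append(f"Task: {task}")
    return "\n".join(lines)


def parse_tool_call(model_output, tool_specs):
    """Return (name, arguments) if the output is a valid call, else None."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return None
    if not isinstance(call, dict):
        return None
    known = {spec["name"] for spec in tool_specs}
    if call.get("tool") not in known:
        return None
    if not isinstance(call.get("arguments"), dict):
        return None
    return call["tool"], call["arguments"]


def agent_step(model, task, tool_specs, handlers):
    """One model turn: either a tool result or a plain-text answer."""
    reply = model(build_prompt(task, tool_specs))
    parsed = parse_tool_call(reply, tool_specs)
    if parsed is None:
        return {"type": "text", "content": reply}
    name, arguments = parsed
    return {"type": "tool_result", "tool": name,
            "content": handlers[name](**arguments)}


def gc_content(sequence):
    s = sequence.upper()
    return (s.count("G") + s.count("C")) / len(s)


TOOL_SPECS = [{"name": "gc_content",
               "description": "GC fraction of a DNA sequence",
               "parameters": ["sequence"]}]
HANDLERS = {"gc_content": gc_content}


def scripted_model(prompt):
    # Stand-in for a real LLM; always requests the same tool call.
    return '{"tool": "gc_content", "arguments": {"sequence": "GGCC"}}'


print(agent_step(scripted_model, "Compute GC content", TOOL_SPECS, HANDLERS))
```

Because `agent_step` only depends on the prompt-in, text-out signature, swapping in a different model requires no change to the tools, which is the property that makes side-by-side comparison of agents possible.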

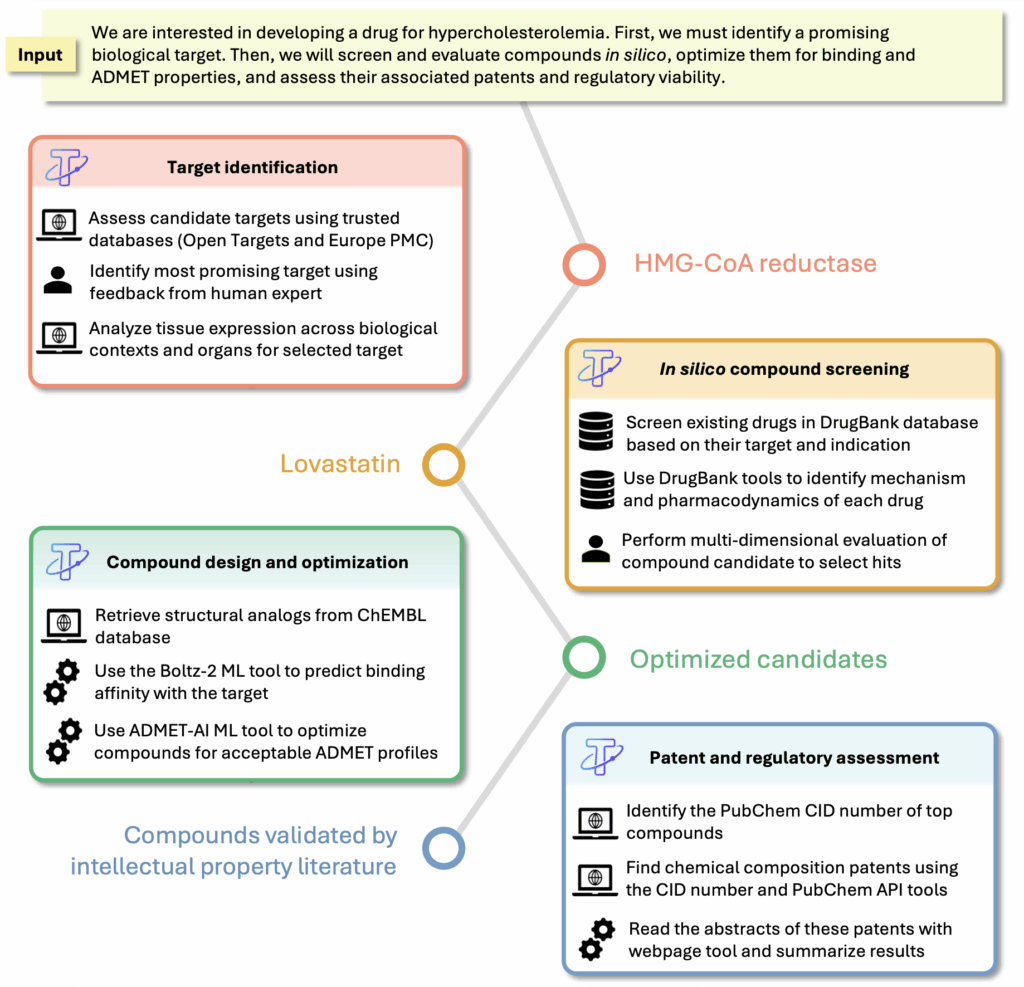

An AI scientist explores better compounds for high cholesterol

Computational drug discovery involves a sequence of steps that connect biology, chemistry, and pharmacology. Each step uses different data sources and computational models. ToolUniverse allows an AI agent to coordinate these steps by selecting and combining the appropriate tools. To demonstrate this, we connected ToolUniverse with the Gemini-CLI agent, creating an AI scientist that optimizes chemical compounds to improve their drug-like properties through reasoning. The system was prompted to find safer and more effective alternatives to lovastatin, a drug used to lower cholesterol (Figure 5).

The first step, Target Identification, used literature review and profiling tools to determine which proteins control cholesterol metabolism. The AI scientist searched biomedical literature and databases of drug-target interactions, identifying HMG-CoA reductase as an enzyme involved in cholesterol synthesis. It examined expression patterns across tissues to assess possible side effects, recognizing that inhibition outside the liver could cause unwanted effects. This step mirrors how target discovery is performed in early-stage research.

The second step, Starting Compound Identification, focused on finding drugs that act on the identified target. The AI scientist queried the chemical database through ToolUniverse to retrieve drugs that inhibit HMG-CoA reductase. It selected lovastatin as a suitable starting compound based on its established mechanism and clinical use, noting that its off-target activity arises from high penetration into non-liver tissues.

The third step, Compound Optimization, improved upon the starting compound. The AI scientist accessed the ChEMBL chemical library to retrieve structural analogs of lovastatin and analyzed each analog using predictive models available in ToolUniverse. These included Boltz-2 for estimating binding affinity and ADMET-AI for predicting pharmacological and absorption properties. Combining these models, the AI scientist evaluated dozens of analogs in silico, ranking them by predicted binding strength, selectivity, and safety.
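The ranking step can be illustrated with a simple weighted score over several predicted properties. This is only a sketch of the general idea: the article does not state how the AI scientist combined predictions, so the weighted-sum rule, the `rank_candidates` function, the analog names, and every number below are invented placeholders rather than outputs of Boltz-2, ADMET-AI, or the actual workflow.

```python
def rank_candidates(candidates, weights=None):
    """Order candidates by a weighted sum of property scores.

    Each property is assumed to be pre-scaled to 0..1 with higher = better.
    """
    properties = ("binding", "selectivity", "safety")
    if weights is None:
        weights = {prop: 1.0 / len(properties) for prop in properties}

    def combined(entry):
        return sum(weights[prop] * entry[prop] for prop in properties)

    return sorted(candidates, key=combined, reverse=True)


# Invented placeholder scores for three hypothetical analogs.
ANALOGS = [
    {"name": "analog_1", "binding": 0.9, "selectivity": 0.5, "safety": 0.4},
    {"name": "analog_2", "binding": 0.6, "selectivity": 0.8, "safety": 0.9},
    {"name": "analog_3", "binding": 0.3, "selectivity": 0.2, "safety": 0.6},
]

for entry in rank_candidates(ANALOGS):
    print(entry["name"])
```

Changing the weights changes what "best" means. A weighting that favors binding alone would rank `analog_1` first, which is why the choice of criteria deserves as much scrutiny as the predictions themselves.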

The fourth step, Candidate Validation, assessed the results of optimization. The AI scientist confirmed that its workflow reproduced known findings by identifying pravastatin, a drug with fewer off-target effects than lovastatin, as a strong candidate. It also highlighted a molecule (CHEMBL2347006/CHEMBL3970138) predicted to have stronger binding affinity, reduced brain penetration, and improved bioavailability. The final step, Patent Assessment, verified whether the top-ranked compound had existing intellectual property. Using patent-mining tools within ToolUniverse, the AI scientist located records showing that this molecule was patented for cardiovascular use.

This example demonstrates how ToolUniverse connects language models with scientific tools for drug discovery reasoning. It transforms a general-purpose LLM into an AI scientist that carries out evidence-based analysis in computational drug discovery.

Conclusion and outlook

ToolUniverse provides an environment for constructing and studying AI agents for science. It lays a foundation for connecting language models and reasoning systems with more than six hundred scientific tools through unified communication protocols, registration methods, and execution standards. With ToolUniverse, AI scientists can now operate at scale as collaborators with human researchers: an AI biologist interpreting gene expression data, an AI chemist designing molecules, an AI physicist modeling complex systems, and an AI neuroscientist analyzing brain circuits.

The next phase of ToolUniverse will focus on large-scale benchmarking of scientific reasoning and generalization. We are expanding this work to integrate experimental systems, laboratory automation, and physics-based simulators so that agents can function across digital and physical worlds.

The vision for AI agents in science is to build systems that reason over existing knowledge while also generating and testing new hypotheses, bridging natural language, computer language, and physical experimentation. These agents could redefine discovery as a collaborative process between humans and computational intelligence.

To learn more, contribute to ToolUniverse, and join our community, visit the project, paper and code links below.