A Next-Generation Architecture for Elastic and Conditional Computation

December 07, 2023Large foundation models have been used in a variety of applications with varying resource constraints. These applications could be anything from audio recognition on mobile phones to running large foundation models for web applications. To overcome the challenge of adapting models to different resource limitations, we propose MatFormer. This innovative architecture is “natively elastic” – that is, it can be trained once to extract hundreds of smaller, accurate submodels at inference time, without any additional training. MatFormer eliminates the need for developers to release multiple models or resort to inaccurate compression techniques for constraint-aware deployment.

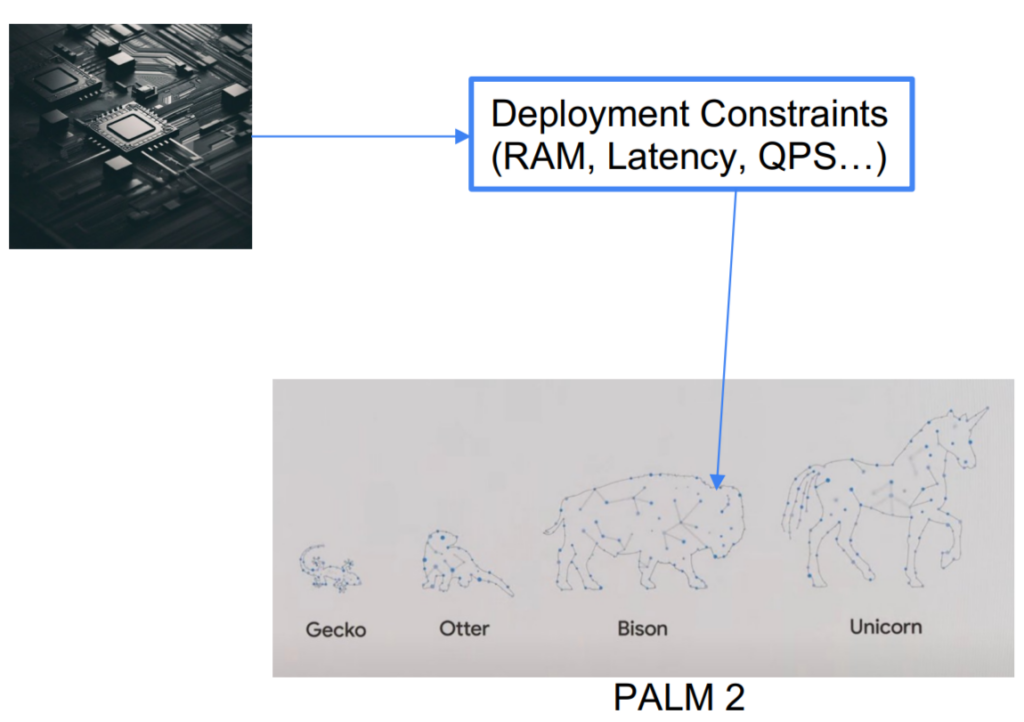

Deploying Large Foundation Models

We illustrate the status quo of model deployment using the example of the Llama series of language models, ranging from 7B to 64B parameters. Consider a scenario where the deployment constraints allow for a 50B-parameter Llama model, but only a 33B variant is available. This forces users to settle for a less accurate model despite having a larger latency budget. While model compression can alleviate this issue, it often requires additional training for each extracted model. Moreover, inference time techniques like speculative decoding (Leviathan et al., 2023) and model cascades require co-locating models on the same device. Training models independently incurs significant overhead for colocation during inference and are not behaviorally consistent with each other which is detrimental to quality.

To make the deployment of these large foundation models amenable to resource constraints, on-device to the cloud, we desire a universal model that can be used to extract smaller yet accurate models for free depending on the accuracy-vs-compute trade-off for each deployment constraint.

MatFormer

We introduce MatFormer, a next generation model architecture that scales as reliably as vanilla Transformers while satisfying these desiderata. We do this by building upon Matryoshka Representation Learning (MRL) (Kusupati et al., 2022), a simple nested training technique that induces flexibility in the learned representations. More specifically, MRL optimizes a single learning objective across g subvectors of a dense vector representation from the model being optimized. These subvectors are created by considering the first k-dimensions of the model representation and are as accurate as baseline models specifically trained for those granularities. MRL sub-vectors that are not explicitly optimized for can also be extracted by picking the necessary number of dimensions, enabling flexible dense representations tailored to specific deployment constraints.

With MatFormer we bring the nested structure of MRL from the activations/representation space to the parameter/weight space. For simplicity, we focus on the Feed-Forward Network (FFN) component of the Transformer block, given that as we scale Transformers up, the FFN can contribute to upwards of 60% of the inference cost.

In the figure below, we illustrate training four different granularities within the Transformer block – XL, L, M, and S. We jointly optimize for these different granularities with exponentially spaced hidden dimension sizes ranging from h to h/8 dimensions. This allows us to reduce the cost of the FFN block by up to 87.5% at inference time. While jointly optimizing multiple granularities results in training overhead, when compared to training these 4 models S, M, L, and XL independently – as is the current norm – MatFormer can be up to 20% faster to train. This can be further improved by employing training optimizations.

While we explicitly optimize for only 4 granularities, we can further extract hundreds of subnetworks that scale linearly with size by using a method called Mix-n-Match. Mix’n’Match involves varying the capacities/granularities of MatFormer blocks across different layers during inference. For instance, the first four layers could be XL, while the next three could be S. This simple approach enables the extraction of a vast array of smaller models tailored to specific deployment constraints, all while following accuracy trends without explicit training.

Results

In our experiments, we show that MatFormer can create scalable decoder-only language models (MatLMs) and vision encoders (MatViT) that can enable accurate and flexible deployment across language and vision domains for generative and discriminative tasks.

For a 2.6B decoder-only MatLM, we find that the optimized smaller models are as accurate as baselines – both on perplexity and downstream evaluations – with even more “free” models obtained using Mix’n’Match that improve predictably with scale.

These smaller MatFormer submodels are significantly more consistent (5-10% across model sizes) with the largest model than independently trained models. That is, the smaller subnetworks make predictions that are closer to that of the largest (XL) model compared to baselines. This (1) enables consistent deployment across scales and (2) boosts inference optimization techniques like speculative decoding (6% speedup over baseline) while keeping the accuracy the same as the XL model.

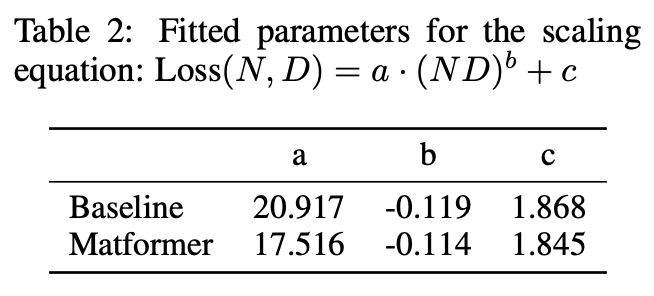

Moreover, we train models at sizes ranging from 70M to 2.6B for up to 160B tokens and find that MatFormer scales as reliably as vanilla Transformers and that we can fit a single scaling law for all MatFormer submodels agnostic to the granularity.

We show that MatFormer works well for modalities other than text – we extend MatFormer to Vision Transformer-based encoders (MatViT), where we also see that we can use Mix’n’Match to extract models that span the accuracy-vs-compute curve.

Finally, MatFormer enables truly elastic adaptive retrieval for the first time. MatFormer allows for elastic query-side encoders based on the resource constraints and the query hardness – potentially impacting large-scale web search. This is possible because MatFormer preserves the metric space, unlike independently trained models which would further need consistency-aware distillation to achieve the same result.

Conclusion

Using MatFormer, we introduce an algorithmic method to elastically deploy large models while being cheaper to train than a series of independent vanilla Transformer models. This method not only allows developers to offer end-users the most accurate models possible but also opens up the possibility of query-dependent conditional computation. In the future, we plan on creating elastic methods that can compress the depth of large models, and create more sophisticated routing algorithms for conditional computation in the MatFormer-powered large foundation models. Overall, MatFormer is an efficient, elastic next-generation architecture that enables web-scale intelligent systems.

We have open-sourced the code for MatViT training along with checkpoints:

Finally, we have utilized the Kempner Institute’s research cluster for public reproduction of MatLM results on the OLMo models up to 1.3B parameters using the Pile corpus – code and models are available below.

We have open-sourced the code for MatViT training along with checkpoints:

Finally, we have utilized the Kempner Institute’s research cluster for public reproduction of MatLM results on the OLMo models up to 1.3B parameters using the Pile corpus – code and models are available below.