Anyone who plays computer games probably knows that GPU stands for graphics processing unit: a computer equipped with one of these processors can render smooth, high-resolution graphics for games and video applications. The same computing power that enables smooth graphics also turns out to be really useful for AI.

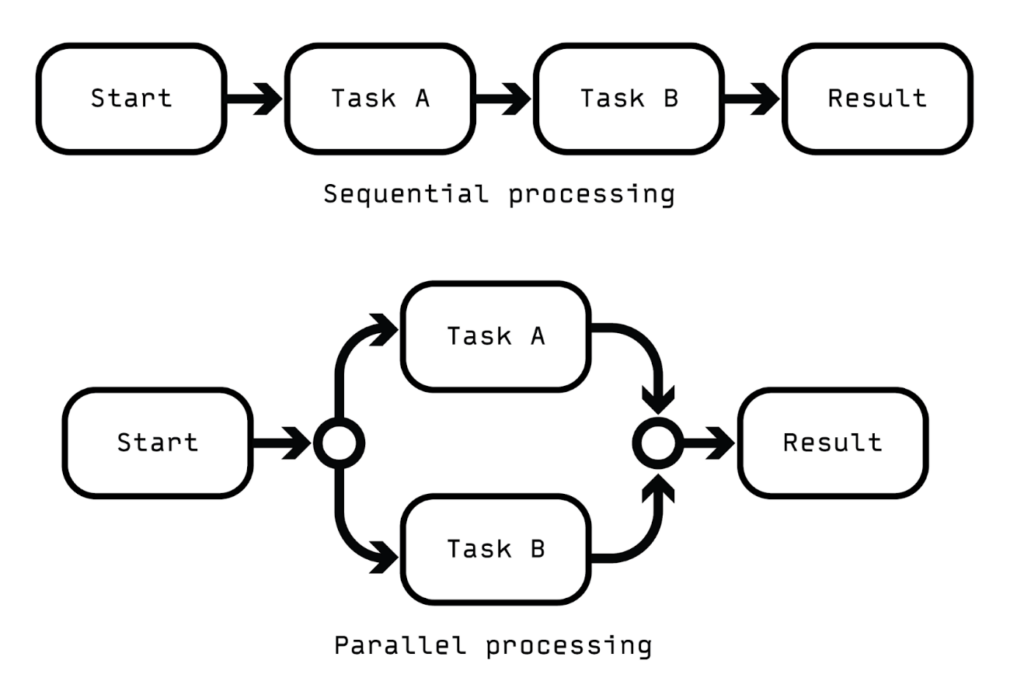

The key is that a GPU can do many simple tasks simultaneously, an ability known as parallel processing. This is in stark contrast to a standard central processing unit (CPU), which does only a handful of calculations at a time and is optimized to perform them in step-by-step sequences (Figure 1).
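To make the contrast concrete, here is a minimal Python sketch of the same element-wise job done two ways. This is an illustration only, not how GPU code is actually written. One version is CPU-style and handles items one after another. The other splits the items into chunks and hands them to a pool of workers. The `square` task and the worker count are arbitrary choices for the example. CPython threads share a single interpreter lock, so this pool shows the *shape* of parallel work rather than a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor

def square(x: float) -> float:
    # A deliberately simple task: each item is independent of all the others.
    return x * x

def run_sequential(values: list[float]) -> list[float]:
    # CPU-style: one item after another, in order.
    return [square(v) for v in values]

def run_chunked(values: list[float], num_workers: int = 4) -> list[float]:
    # GPU-style in spirit: split the input into chunks so that several
    # workers can each process their own chunk at the same time.
    chunk_size = max(1, -(-len(values) // num_workers))  # ceiling division
    chunks = [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # pool.map returns results in chunk order, so the output lines up with the input.
        partial_results = pool.map(lambda chunk: [square(v) for v in chunk], chunks)
    return [y for part in partial_results for y in part]

data = [float(i) for i in range(10)]
print(run_chunked(data)[:4])  # [0.0, 1.0, 4.0, 9.0]
```

Both functions produce identical results. Only the way the work is divided differs, and that division is what a GPU exploits at a much larger scale.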

Artificial Neural Networks: The Workhorses of Modern AI

Parallel computation turns out to be crucial for running and training modern artificial neural networks (ANNs), computer programs that are the workhorses of the ongoing AI revolution. ANNs are able to extract hidden patterns in data. If the dataset is large enough, the patterns that an ANN extracts can be used to emulate human skills, including face recognition and the production of art, music, and language.

The design of ANNs was inspired in part by real brains. The human brain contains around 80 billion neurons that operate simultaneously in complex networks. The artificial counterpart of a biological neuron is an artificial neuron, which is a processing unit made of computer code. Networking these artificial neurons together gives rise to an ANN.
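In its simplest textbook form, an artificial neuron computes a weighted sum of its inputs, adds a bias, and passes the result through a nonlinear activation function. The sketch below assumes a ReLU activation and uses made-up weights purely for illustration. Real networks learn their weights from data. Every neuron in a layer reads the same inputs, but none depends on a neighbor's output. That independence is exactly the kind of work that can be done in parallel.

```python
def relu(z: float) -> float:
    # Rectified linear unit: keeps positive values, replaces negatives with zero.
    return max(0.0, z)

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    # Weighted sum of the inputs plus a bias, followed by the activation.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return relu(z)

def layer(inputs: list[float], weight_rows: list[list[float]], biases: list[float]) -> list[float]:
    # Each neuron has its own row of weights. No neuron needs another
    # neuron's output, so all of them could be evaluated simultaneously.
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical layer: two neurons, three inputs each.
x = [1.0, 2.0, 3.0]
W = [[0.5, -1.0, 0.25], [0.5, 0.25, 0.5]]
b = [0.0, -1.0]
print(layer(x, W, b))  # [0.0, 1.5]
```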

Until fairly recently, ANNs were run on CPUs, so despite being made up of software “neurons,” they did not really capitalize on their potential for parallel processing. By running them on GPUs, which allow for parallel processing, researchers are not just making ANNs faster; they are also bringing them into closer alignment with their biological counterparts.

Leveraging the Power of GPUs to Train and Run ANNs

So how do computer programmers successfully and efficiently use GPUs and the power of parallel processing to train and run ANNs? The trick for programmers is to figure out how to divide up a big computation into sub-problems that can be pursued in parallel.
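A layer of neurons is, at its core, a matrix-vector product. This makes it a natural candidate for division into sub-problems. The minimal sketch below splits the rows of a matrix into blocks. Each block can be multiplied by the input vector independently, and the partial results are then stitched back together. The function names and the two-way split are illustrative choices, not any particular library's API. As in the earlier sketch, Python threads only demonstrate the structure here; real GPU code would launch the blocks as kernels through CUDA or a deep learning framework.

```python
from concurrent.futures import ThreadPoolExecutor

Matrix = list[list[float]]

def matvec(rows: Matrix, x: list[float]) -> list[float]:
    # Plain matrix-vector product for one block of rows.
    return [sum(a * b for a, b in zip(row, x)) for row in rows]

def split_rows(matrix: Matrix, num_parts: int) -> list[Matrix]:
    # Divide the rows into roughly equal, non-overlapping blocks.
    size = max(1, -(-len(matrix) // num_parts))  # ceiling division
    return [matrix[i:i + size] for i in range(0, len(matrix), size)]

def parallel_matvec(matrix: Matrix, x: list[float], num_parts: int = 2) -> list[float]:
    blocks = split_rows(matrix, num_parts)
    # Each block is an independent sub-problem: it needs the whole vector x,
    # but none of the other blocks' results.
    with ThreadPoolExecutor(max_workers=num_parts) as pool:
        partials = list(pool.map(lambda block: matvec(block, x), blocks))
    # Bring the sub-results back together, in their original order.
    return [y for part in partials for y in part]

print(parallel_matvec([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], [1.0, 1.0]))  # [3.0, 7.0, 11.0]
```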

An extended analogy might help here. Imagine that a GPU is like a chef working at a restaurant. Each chef is part of a team within the kitchen. A GPU cluster is like a restaurant with multiple teams of chefs, each working at a dedicated station.

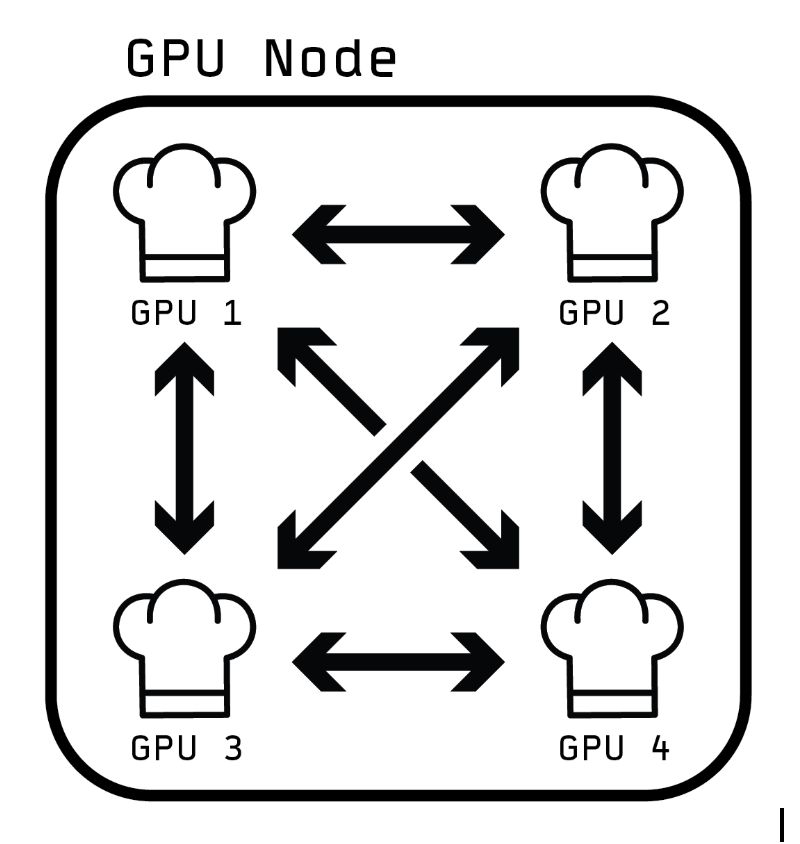

Each team of chefs at a station is analogous to a GPU “node”: a single server that houses two or more GPUs connected together (Figure 2). Just as the timing and allocation of various tasks are essential for efficient performance in a busy kitchen, the way that tasks are allocated and timed within and among nodes is critical for optimal GPU cluster performance.

For example, in a kitchen, each team of chefs performs a distinct task, and within each team, each chef performs a different sub-task. A task might be making a specific dish, and a sub-task might involve prepping an ingredient or making a sauce for that dish. Sub-tasks can often be done at the same time, in parallel. But ingredients need to be brought together eventually, and this happens in a specific order for each dish. Likewise, different dishes need to be brought together when a complete meal is served. In an efficient kitchen, every task and sub-task is completed at the right time: the onions are sliced and ready to go when the oil is hot. And no one is just standing around waiting for someone else to finish their job: every chef’s time is used optimally.
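The kitchen's ordering constraints can be written down as a small dependency graph. In the sketch below, the recipe, the task names, and the durations are all hypothetical. It also assumes there are enough chefs for every task to start the moment its prerequisites are done. A task's finish time is then the latest finish time among its prerequisites plus its own duration. The longest chain of dependent tasks, not the total amount of work, sets how long the dish takes.

```python
from functools import lru_cache

# Hypothetical recipe: task -> (duration in minutes, prerequisite tasks).
TASKS: dict[str, tuple[int, tuple[str, ...]]] = {
    "slice_onions": (5, ()),
    "heat_oil": (4, ()),
    "make_sauce": (10, ()),
    "fry_onions": (6, ("slice_onions", "heat_oil")),
    "assemble_dish": (3, ("fry_onions", "make_sauce")),
}

@lru_cache(maxsize=None)
def finish_time(task: str) -> int:
    # A task can start only once all of its prerequisites have finished.
    # Tasks that do not depend on each other run in parallel.
    duration, deps = TASKS[task]
    start = max((finish_time(d) for d in deps), default=0)
    return start + duration

def sequential_time() -> int:
    # A single chef doing everything in turn: the sum of all durations.
    return sum(duration for duration, _ in TASKS.values())

print(finish_time("assemble_dish"), sequential_time())  # 14 28
```

With parallel sub-tasks, the dish is ready in 14 minutes instead of 28. Note that the oil is hot after 4 minutes but must wait for the onions, which take 5. That one-minute wait is the kind of idle time a good schedule tries to minimize.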

Similarly, in an efficiently used GPU cluster, no GPU “chef” is left idling for long. The computational tasks are allocated so that a processor rarely has to wait around for others to complete their work. In this way, computing power is used as efficiently as possible.

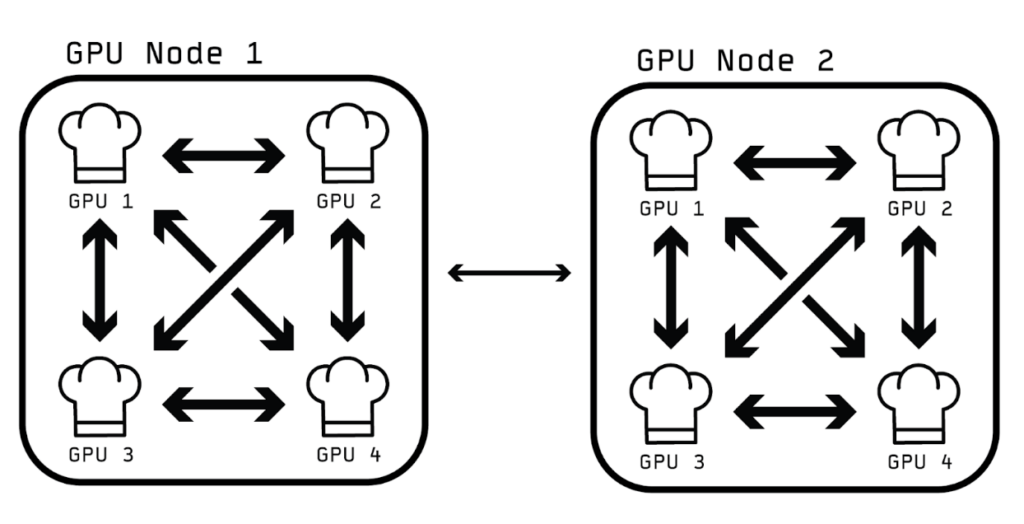

In a GPU cluster, tasks and sub-tasks must be allocated to make the most efficient use of communication, both between individual GPUs and between nodes. In many clusters, including the Kempner AI Cluster, data transfer rates are faster within nodes than between them (Figure 3).

This is analogous to saying that chefs can communicate more quickly with other chefs within their own team than with chefs in other teams. Allocating jobs efficiently means ensuring that activities involving frequent collaboration happen among nearby GPUs (those within the same node) whenever possible. This is the key engineering challenge when allocating an ANN algorithm to a GPU cluster: how to allocate tasks so that the slower node-to-node transfers happen only when absolutely necessary.
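A toy cost model shows why placement matters. The bandwidth figures below are assumed round numbers chosen for illustration; they are not measurements of the Kempner AI Cluster or any other system. The sketch also assumes two tasks per node and transfers that run one after another. Tasks A and B exchange a lot of data, as do C and D, while A and C exchange only a little. Placing each chatty pair on the same node avoids most of the slow node-to-node traffic.

```python
# Illustrative bandwidths in GB/s: assumed values, not measurements of any real cluster.
INTRA_NODE_GBPS = 600.0
INTER_NODE_GBPS = 50.0

def transfer_seconds(traffic: dict[tuple[str, str], float], placement: dict[str, int]) -> float:
    """Total time to move all the data, given the gigabytes exchanged by each pair
    of tasks and the node each task is placed on. Transfers are assumed to run
    one after another, which keeps the arithmetic simple."""
    total = 0.0
    for (a, b), gigabytes in traffic.items():
        same_node = placement[a] == placement[b]
        bandwidth = INTRA_NODE_GBPS if same_node else INTER_NODE_GBPS
        total += gigabytes / bandwidth
    return total

# Hypothetical workload: A and B talk a lot, C and D talk a lot, A and C rarely.
traffic = {("A", "B"): 120.0, ("C", "D"): 120.0, ("A", "C"): 6.0}

chatty_pairs_together = {"A": 0, "B": 0, "C": 1, "D": 1}  # node id for each task
chatty_pairs_split = {"A": 0, "B": 1, "C": 0, "D": 1}

print(f"{transfer_seconds(traffic, chatty_pairs_together):.2f} s")  # 0.52 s
print(f"{transfer_seconds(traffic, chatty_pairs_split):.2f} s")     # 4.81 s
```

Both placements move the same total amount of data. The roughly ninefold difference comes entirely from which transfers have to cross between nodes.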

So for parallelizable jobs, some sub-tasks can run on their own for a while, but eventually their results have to be brought together to complete higher-level tasks. And in the interest of efficiency, no GPU “chef” should be left waiting for its colleagues to finish their part: a good scheduling algorithm anticipates which workers are likely to finish their tasks quickly and allocates new jobs to them rather than letting them sit idle.
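One classic way to keep workers busy is greedy list scheduling: each new job goes to whichever worker will become free first. The sketch below combines this with the textbook "longest processing time first" ordering. It assumes job durations are known in advance; real systems can only estimate them. It illustrates the idea in the paragraph above and is not a description of the scheduler any particular cluster uses.

```python
import heapq

def schedule(job_durations: list[float], num_workers: int) -> tuple[float, list[list[int]]]:
    """Greedy list scheduling: hand each job to the worker that frees up first.
    Jobs are taken longest-first (the LPT heuristic). Returns the makespan
    (time until every worker is done) and the job indices given to each worker."""
    if num_workers < 1:
        raise ValueError("need at least one worker")
    # Min-heap of (time this worker becomes free, worker id).
    free_at = [(0.0, w) for w in range(num_workers)]
    heapq.heapify(free_at)
    assignments: list[list[int]] = [[] for _ in range(num_workers)]
    order = sorted(range(len(job_durations)), key=lambda j: job_durations[j], reverse=True)
    for job in order:
        t, worker = heapq.heappop(free_at)  # the earliest-available worker
        assignments[worker].append(job)
        heapq.heappush(free_at, (t + job_durations[job], worker))
    makespan = max(t for t, _ in free_at)
    return makespan, assignments

makespan, plan = schedule([7.0, 5.0, 4.0, 3.0, 3.0, 2.0], num_workers=3)
print(makespan, plan)  # 9.0 [[0, 5], [1, 4], [2, 3]]
```

Here the 24 units of work finish in 9 time units across three workers. Because the job of length 7 cannot be split, no schedule can do better than 9 for this set of jobs.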

At a Glance: The Kempner’s GPUs

The Kempner AI Cluster has 144 Nvidia A100 40GB GPUs and 384 Nvidia H100 80GB GPUs, making it one of the largest academic AI clusters in the world.

The Kempner’s H100s deliver 16.29 petaflops (quadrillion floating-point operations per second), making the cluster the 85th fastest supercomputer in the world and the 3rd fastest academic supercomputer in the world, as assessed by the independent TOP500 organization in November 2024.

The Kempner’s H100s operate at an efficiency of 48.065 gigaflops per watt, making the cluster the 32nd most energy-efficient supercomputer in the world on the Green500 list, as assessed by TOP500 in November 2024.
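The headline figures also allow a couple of quick back-of-the-envelope checks. The sketch assumes the speed and efficiency numbers come from the same benchmark run. Under that assumption, dividing speed by efficiency gives the implied power draw during the run. The memory total simply adds up the nominal capacity of every GPU in the inventory.

```python
# Figures from the TOP500 / Green500 lists (November 2024).
rmax_flops = 16.29e15                 # 16.29 petaflops
efficiency_flops_per_watt = 48.065e9  # 48.065 gigaflops per watt

# Assumption: both figures describe the same run, so power = speed / efficiency.
implied_power_watts = rmax_flops / efficiency_flops_per_watt
print(f"Implied power draw: {implied_power_watts / 1e3:.0f} kW")  # Implied power draw: 339 kW

# Nominal GPU memory across the cluster: 144 A100s at 40 GB plus 384 H100s at 80 GB.
total_gpu_memory_gb = 144 * 40 + 384 * 80
print(f"Total GPU memory: {total_gpu_memory_gb:,} GB")  # Total GPU memory: 36,480 GB
```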

Learn more about GPUs

- The Kempner Institute organizes regular workshops on topics such as how to work with GPUs, including the network of GPUs that make up the Kempner AI Cluster. Sign up for our weekly newsletter for workshop updates.

- For more info on machine learning, data architecture, GPUs, and high performance computing topics, check out the Kempner Computing Handbook.

- The Intro to Parallel Computing section of the Kempner Computing Handbook explains how GPUs work, helping researchers figure out how to break up their machine learning algorithms into (giga)byte-size chunks that can be performed in parallel, and also how and when to bring the parallel streams together.

- For more information on computing resources and the Kempner’s dedicated Research & Engineering Team, check out the Kempner’s compute page.

About this series

Kempner Bytes is a series of explainers from the Kempner Institute related to cutting-edge natural and artificial intelligence research, as well as the conceptual and technological foundations of that work.

About the Kempner Institute

The Kempner Institute seeks to understand the basis of intelligence in natural and artificial systems by recruiting and training future generations of researchers to study intelligence from biological, cognitive, engineering, and computational perspectives. Its bold premise is that the fields of natural and artificial intelligence are intimately interconnected; the next generation of artificial intelligence (AI) will require the same principles that our brains use for fast, flexible natural reasoning, and understanding how our brains compute and reason can be elucidated by theories developed for AI. Join the Kempner mailing list to learn more, and to receive updates and news.