Compute

The Kempner Institute’s state-of-the-art AI cluster and its dedicated team of research scientists and research engineers support advanced research on intelligence from biological, cognitive, and computational perspectives.

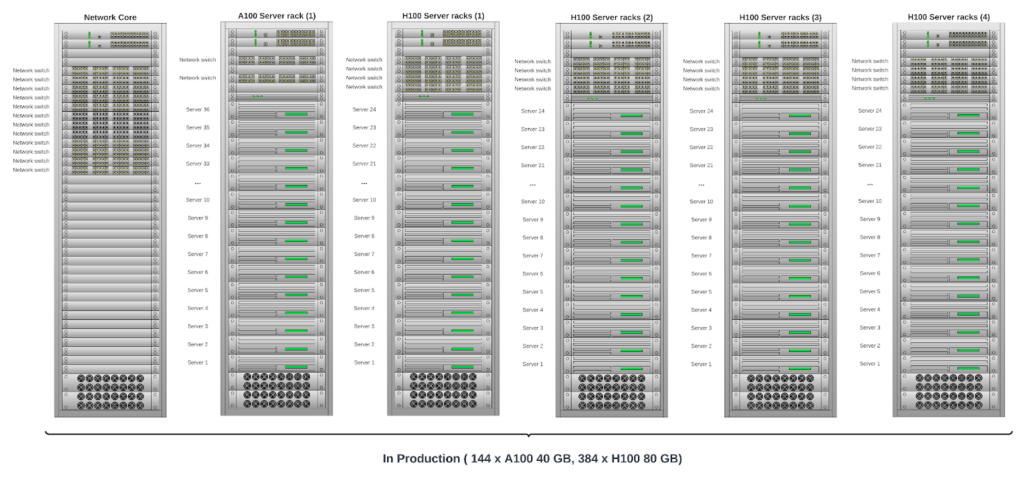

Hardware

The Kempner Institute AI cluster opened with an initial pilot installation in spring 2023. In October 2023, the Kempner Institute announced that it had purchased additional NVIDIA H100 GPUs to add to its AI cluster.

The Kempner AI cluster is now fully operational with 144 NVIDIA A100 40GB GPUs and 384 NVIDIA H100 80GB GPUs. It is one of the largest academic AI clusters in the world, equivalent to more than 1,300 NVIDIA A100 GPUs in terms of FP32 TFLOPS.
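The A100-equivalence figure can be checked against NVIDIA's published peak FP32 (non-Tensor Core) throughput: 19.5 TFLOPS for the A100 and 67 TFLOPS for the H100 SXM. The sketch below assumes the H100s are the SXM form factor, which this page does not state, and the function name `a100_equivalents` is hypothetical. It compares peak numbers only, not measured performance on real workloads.

```python
# Peak FP32 (non-Tensor Core) throughput from NVIDIA datasheets, in TFLOPS.
# Assumption: the H100s are the SXM variant; the form factor is not stated.
A100_FP32_TFLOPS = 19.5
H100_SXM_FP32_TFLOPS = 67.0


def a100_equivalents(num_a100: int, num_h100: int,
                     h100_tflops: float = H100_SXM_FP32_TFLOPS) -> float:
    """Express a mixed A100/H100 fleet as an A100 count by peak FP32 TFLOPS."""
    total_tflops = num_a100 * A100_FP32_TFLOPS + num_h100 * h100_tflops
    return total_tflops / A100_FP32_TFLOPS


if __name__ == "__main__":
    # GPU counts as stated for the Kempner AI cluster.
    equiv = a100_equivalents(144, 384)
    print(f"~{equiv:.0f} A100-equivalents")
```

Under this assumption, 144 A100s plus 384 H100s come to roughly 1,463 A100-equivalents, consistent with the stated "more than 1,300".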

The cluster uses NVIDIA Quantum InfiniBand switches to build a non-blocking InfiniBand network that connects all GPUs, with 400 Gbps InfiniBand NDR links between H100 GPUs and 200 Gbps InfiniBand HDR links between A100 GPUs. This high-speed, non-blocking interconnect facilitates training and fine-tuning large language models (LLMs). The dedicated network core can provide non-blocking connectivity for up to seven H100 racks, or 672 H100 GPUs in total.

The computing power of the Kempner AI cluster makes it possible to train Meta's Llama 3.1 8B and Llama 3.1 70B models on one trillion tokens in about two weeks and about three months, respectively.
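A common rule of thumb for sanity-checking training-time figures like these is that training a dense transformer with N parameters on D tokens costs about 6·N·D FLOPs (forward plus backward pass). The sketch below applies that approximation to the stated model sizes and token count and reports the average throughput each run would need to sustain. The nominal parameter counts (8e9 and 70e9), reading "three months" as 90 days, and the helper names are assumptions for illustration; the page does not say how its estimates were derived.

```python
SECONDS_PER_DAY = 86_400


def training_flops(num_params: float, num_tokens: float) -> float:
    """Rule-of-thumb training compute for a dense transformer: C ~ 6 * N * D."""
    return 6.0 * num_params * num_tokens


def implied_sustained_flops(num_params: float, num_tokens: float,
                            days: float) -> float:
    """Average FLOP/s the whole run must sustain to finish in `days`."""
    return training_flops(num_params, num_tokens) / (days * SECONDS_PER_DAY)


if __name__ == "__main__":
    tokens = 1e12  # one trillion tokens, as stated on the page
    # Nominal parameter counts; "three months" taken as 90 days (assumption).
    runs = [("Llama 3.1 8B", 8e9, 14), ("Llama 3.1 70B", 70e9, 90)]
    for name, params, days in runs:
        total = training_flops(params, tokens)
        rate = implied_sustained_flops(params, tokens, days)
        print(f"{name}: {total:.1e} FLOPs, "
              f"~{rate / 1e15:.0f} PFLOP/s sustained over {days} days")
```

Under these assumptions the 8B run needs about 4.8 × 10^22 FLOPs (roughly 40 PFLOP/s sustained over 14 days) and the 70B run about 4.2 × 10^23 FLOPs (roughly 54 PFLOP/s over 90 days). How much of the cluster's peak throughput a real run achieves depends on precision, parallelism strategy, and communication overhead.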

Research & Engineering Team

About

The Kempner Institute has assembled a Research & Engineering team, led by Kempner Senior Director of AI/ML Research Engineering Max Shad. The Research & Engineering team is a cross-disciplinary group of research scientists and research engineers covering a wide spectrum of research areas and engineering knowledge. With deep expertise in computational neuroscience, machine learning, engineering, and high-performance computing, the team is uniquely positioned to pursue novel research on large-scale natural and artificial intelligence.

The R&E team consists of ML Research Scientists, ML Research Engineers, GPU Computing Engineers, Data Architects, AI Research Computing Engineers, Project Managers, and Engineering Fellows/Interns. These experts bring a range of skill sets and undertake projects across an engineering/research continuum.

The team works as a collaborative unit and engages directly with the Kempner’s faculty, fellows and students. Together, they apply insights from cutting-edge scientific research in AI architectures and computing hardware architectures. This work ranges from developing novel AI approaches that may provide insight into the brain to training and fine-tuning large language models and optimizing their performance at scale. The team draws on specializations in computational neuroscience, machine learning, scientific programming, software engineering, cloud computing, and GPU architecture.

Advancing the state of the art through open science

The Research & Engineering team leads the Kempner’s efforts to operationalize its commitment to open science by curating data sets, creating reproducible models, and ensuring that all Kempner science and research is publicly accessible. This work is essential to making Kempner research available for the broader academic research community to leverage and build upon, thereby advancing the state of the art in intelligence research.

The overarching role of the Kempner’s Research & Engineering team is to:

- Operationalize the Kempner’s mission to advance the state of the art by moving work from small-scale prototypes to large-scale, disseminated science.

- Teach Kempner researchers and students best practices for using the Kempner AI cluster, helping them make the most effective use of the Kempner’s hardware and share their open-source work on the Kempner’s GitHub and Hugging Face channels.

- Collaborate internally within the R&E team, as well as across Kempner labs and research teams, to operationalize and advance Kempner projects.

- Preserve and provide access to the Kempner’s institutional memory, and apply it in practice to new projects and across the Kempner research community.

Green High Performance Computing

The Kempner’s AI cluster resides in the Massachusetts Green High Performance Computing Center (MGHPCC), a modern data center in western Massachusetts shared by Boston-area universities.